Artificial Intelligence (AI)

2025-10-14 Hedge fund manager Kuppy interviewed on the Rebel Capitalist podcast

2025-10-10 Hedge Fund Manager Just Went Viral For Uncovering The AI Boom's FATAL FLAW

Take-or-pay contract.

2025-10-05 An AI Addendum

2025-08-20 Global Crossing Is Reborn…

What’s a datacenter made of? There are three main components: the building and land at roughly a quarter of the cost; all the power systems, wiring, cooling, racking, etc. at about 40% of the cost; and the GPUs themselves at about 35% of the cost.
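The cost split quoted above can be turned into a quick back-of-the-envelope calculation. The sketch below is illustrative only: the function name `split_capex` and the $10 billion budget are assumptions, and "roughly a quarter" is rounded to exactly 25%.

```python
# Cost shares as quoted in the passage above ("roughly a quarter" taken as 0.25).
COST_SHARES = {
    "building_and_land": 0.25,
    "power_cooling_racking": 0.40,
    "gpus": 0.35,
}


def split_capex(total_capex: float) -> dict[str, float]:
    """Split a total datacenter budget across components using the quoted shares."""
    return {name: total_capex * share for name, share in COST_SHARES.items()}


# Hypothetical budget, chosen only for illustration.
breakdown = split_capex(10_000_000_000)
for name, dollars in breakdown.items():
    print(f"{name}: ${dollars / 1e9:.1f}B")
```

Swapping in a different total only rescales the three figures; the shares themselves come from the quoted estimate.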

I’ve seen this story before—fiber in 2000, shale in 2014, cannabis in 2019. Each time, the technology or product was real, even transformative. But the capital cycle was brutal, the math unforgiving, and the equity holders were ultimately incinerated.

2024-05-30 Understanding TPUs vs GPUs in AI: A Comprehensive Guide

2023-08-23 1 Supplier Is A Winner Take All, Revealing The AI Head-Fakes

2023-05-19 Semi-Custom Silicon For AI and Video, Roadmap, Co-Design Partners

2020-07-24 TPU vs GPU vs Cerebras vs Graphcore: A Fair Comparison between ML Hardware

Artificial Analysis

Hardware > Infrastructure > Models > Applications

Google continues to stand out as the most vertically integrated from TPU accelerators to Gemini

Reasoning models, longer contexts, and agents are multiplying compute demand per user query

Increasing the size of both scale-up domains (single coherent system, e.g. NVL72 connected with NVLink) and scale-out domains (networked nodes, Ethernet-based networking technologies) allows the delivery of greater training compute

Inference techniques confined until recently to the frontier labs are becoming widely available – driven by DeepSeek’s open sourcing, NVIDIA Dynamo and upcoming work from open source projects including SGLang.

Key techniques include prefill/decode disaggregation and expert parallelism across dozens or hundreds of GPUs, along with novel load balancing techniques like scaling expert replicas depending on activation frequency.
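As a rough illustration of scaling expert replicas by activation frequency, the sketch below gives every expert one replica and then hands out the remaining GPU slots greedily to whichever expert currently carries the highest load per replica. This is an assumed, simplified heuristic, not the algorithm used by DeepSeek, NVIDIA Dynamo, or SGLang; the function name `allocate_replicas` and the example counts are hypothetical.

```python
import heapq


def allocate_replicas(activation_counts: list[int], total_slots: int) -> list[int]:
    """Greedy sketch: hot experts receive more replicas than cold ones."""
    n = len(activation_counts)
    if total_slots < n:
        raise ValueError("need at least one slot per expert")

    replicas = [1] * n  # every expert starts with a single replica
    # heapq is a min-heap, so store the negated per-replica load.
    heap = [(-float(count), idx) for idx, count in enumerate(activation_counts)]
    heapq.heapify(heap)

    for _ in range(total_slots - n):
        _, idx = heapq.heappop(heap)  # expert with the highest load per replica
        replicas[idx] += 1
        heapq.heappush(heap, (-activation_counts[idx] / replicas[idx], idx))
    return replicas


# Hypothetical activation counts: expert 0 is far hotter than the others.
print(allocate_replicas([100, 10, 10, 10], total_slots=6))
```

With these made-up counts, both spare slots go to the hot expert, which ends up with three replicas while the others keep one each.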

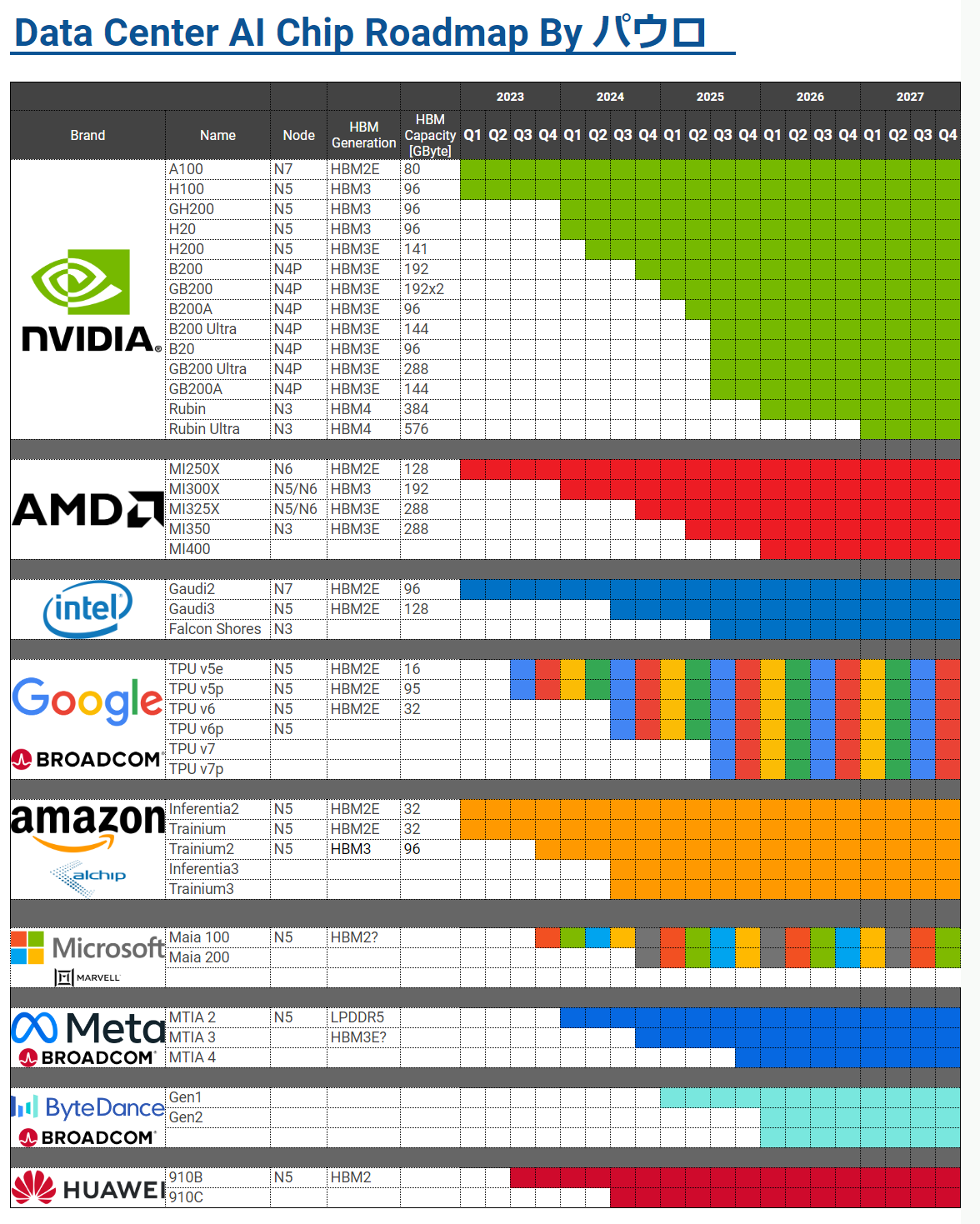

Huawei is emerging as China’s chip leader, designing chips and systems that may approach Hopper-level performance and that are manufactured on a mix of TSMC and SMIC nodes

MLPerf Training

Google DeepMind

- Gemini

- Gemma

- Veo

- NotebookLM

- Imagen

- Lyria

Stargate

OpenAI, Oracle, and SoftBank are announcing five new U.S. AI data center sites under Stargate, OpenAI’s overarching AI infrastructure platform. The combined capacity from these five new sites—along with our flagship site in Abilene, Texas, and ongoing projects with CoreWeave—brings Stargate to nearly 7 gigawatts of planned capacity and over $400 billion in investment over the next three years. This puts us on a clear path to securing the full $500 billion, 10-gigawatt commitment we announced in January by the end of 2025, ahead of schedule.
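For a sense of scale, the headline figures above imply a capital intensity per gigawatt. The sketch below uses the rounded numbers from the announcement ("over $400 billion" for "nearly 7 gigawatts", and $500 billion for 10 gigawatts), so the results are approximations; the function name is hypothetical.

```python
def billions_per_gigawatt(investment_billion_usd: float, capacity_gw: float) -> float:
    """Implied investment per gigawatt of planned capacity, in billions of USD."""
    return investment_billion_usd / capacity_gw


# Rounded figures taken from the announcement quoted above.
planned = billions_per_gigawatt(400, 7)
commitment = billions_per_gigawatt(500, 10)

print(f"planned sites: ~${planned:.1f}B per GW")
print(f"full commitment: ${commitment:.1f}B per GW")
```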

In July, OpenAI and Oracle entered an agreement to develop up to 4.5 gigawatts of additional Stargate capacity. This represents a partnership that exceeds $300 billion between the two companies over the next five years. The three new sites—located in Shackelford County, Texas; Doña Ana County, New Mexico; and a site in the Midwest, which we expect to announce soon; combined with an additional potential expansion of 600 megawatts near the flagship Stargate site in Abilene, Texas—can deliver over 5.5 gigawatts of capacity. Together, these sites are expected to create over 25,000 onsite jobs, and tens of thousands of additional jobs across the U.S. We remain in the process of evaluating additional sites.

The other two Stargate sites being announced today can scale to 1.5 gigawatts over the next 18 months. These sites will be developed through a partnership by SoftBank and OpenAI that can scale to multiple gigawatts of AI infrastructure.

Oracle and OpenAI have entered an agreement to develop 4.5 gigawatts of additional Stargate data center capacity in the U.S.

Together with our Stargate I site in Abilene, Texas, this additional partnership with Oracle will bring us to over 5 gigawatts of Stargate AI data center capacity under development, which will run over 2 million chips.

This significantly advances our progress toward the commitment we announced at the White House in January to invest $500 billion into 10 gigawatts of AI infrastructure in the U.S. over the next four years. We now expect to exceed our initial commitment thanks to strong momentum with partners including Oracle and SoftBank.

Meanwhile, construction of Stargate I in Abilene is progressing and parts of the facility are now up and running. Oracle began delivering the first Nvidia GB200 racks last month and we recently began running early training and inference workloads, using this capacity to push the limits of OpenAI’s next-generation frontier research.

- 2025-01-21 Announcing The Stargate Project

The Stargate Project is a new company which intends to invest $500 billion over the next four years building new AI infrastructure for OpenAI in the United States. We will begin deploying $100 billion immediately.

The initial equity funders in Stargate are SoftBank, OpenAI, Oracle, and MGX. SoftBank and OpenAI are the lead partners for Stargate, with SoftBank having financial responsibility and OpenAI having operational responsibility. Masayoshi Son will be the chairman.

Arm, Microsoft, NVIDIA, Oracle, and OpenAI are the key initial technology partners.

As part of Stargate, Oracle, NVIDIA, and OpenAI will closely collaborate to build and operate this computing system.

OpenAI will continue to increase its consumption of Azure as OpenAI continues its work with Microsoft with this additional compute to train leading models and deliver great products and services.

Resource

Knowledge

Others

Google Gemini

Apple Intelligence

SemiAnalysis

AI Playground

karpathy

CUDA

The NVIDIA® CUDA® Toolkit provides a development environment for creating high-performance, GPU-accelerated applications.

The toolkit includes GPU-accelerated libraries, debugging and optimization tools, a C/C++ compiler, and a runtime library.

cuDNN

The NVIDIA CUDA® Deep Neural Network library (cuDNN) is a GPU-accelerated library of primitives for deep neural networks.

cuDNN provides highly tuned implementations for standard routines such as forward and backward convolution, attention, matmul, pooling, and normalization.

OpenMPI

The Open MPI Project is an open source Message Passing Interface implementation that is developed and maintained by a consortium of academic, research, and industry partners.

Metal

Metal powers hardware-accelerated graphics on Apple platforms by providing a low-overhead API, rich shading language, tight integration between graphics and compute, and an unparalleled suite of GPU profiling and debugging tools.

GPU Clouds

Google Cloud GPUs

Lambda

On-demand & reserved cloud NVIDIA GPUs for AI training & inference

SambaNova Systems

SambaNova strives to be the most efficient and adaptable AI platform on the planet. Our AI solution is designed to empower enterprises to control the trajectory of their data and AI future.

Cerebras

Today, Cerebras stands alone as the world’s fastest AI inference and training platform. Organizations across fields like medical research, cryptography, energy, and agentic AI use our CS-2 and CS-3 systems to build on-premise supercomputers, while developers and enterprises everywhere can access the power of Cerebras through our pay-as-you-go cloud offerings.

We have come a long way in our first decade. But our journey is just beginning.

Groq

With the seismic shift in AI toward deploying or running models – known as inference – developers and enterprises alike can experience instant intelligence with Groq. We provide fast AI inference in the cloud and in on-prem AI compute centers. We power the speed of iteration, fueling a new wave of innovation, productivity, and discovery. Groq was founded in 2016 to build technology to advance AI because we saw this moment coming.

Fireworks

MiniMax

MiniMax is a global AI foundation model company. Founded in early 2022, we are committed to advancing the frontiers of AI towards AGI via our mission Intelligence with Everyone.

Our proprietary multimodal models, led by MiniMax M1, Hailuo-02, Speech-02 and Music-01, have ultra-long context processing capacity and can understand, generate, and integrate a wide range of modalities, including text, audio, images, video, and music. These models power our major AI-native products — including MiniMax, Hailuo AI, MiniMax Audio, Talkie, and our enterprise and developer-facing Open API Platform — which collectively deliver intelligent, dynamic experiences to enhance productivity and quality of life for users worldwide.

To date, our proprietary models and AI-native products have cumulatively served over 157 million individual users across over 200 countries and regions, and more than 50,000 enterprises and developers across over 90 countries and regions.

AI21 Labs

AI21 is pioneering the development of enterprise AI Systems and Foundation Models. Our mission is to build trustworthy artificial intelligence that powers humanity towards superproductivity.

We offer privately deployed models with unmatched performance and reliability with tailored solutions for every organization.

AI21 Labs was founded in 2017 by pioneers of artificial intelligence, Professor Amnon Shashua (founder and CEO of Mobileye), Professor Yoav Shoham (Professor Emeritus at Stanford University and former Principal Scientist at Google), and Ori Goshen (serial entrepreneur and founder of CrowdX) with the goal of building AI systems that become thought partners for humans.

AI21 Labs enables enterprises to design their own generative AI applications powered by our groundbreaking models at the core.

MidJourney

Moonshot AI

Mistral

We are Mistral AI, a pioneering French artificial intelligence startup founded in April 2023 by three visionary researchers: Arthur Mensch, Guillaume Lample, and Timothée Lacroix.

United by their shared academic roots at École Polytechnique and experiences at Google DeepMind and Meta, they envisioned a different, audacious approach to artificial intelligence: to challenge the opaque-box nature of ‘big AI’ and make this cutting-edge technology accessible to all.

This manifested into the company’s mission of democratizing artificial intelligence through open-source, efficient, and innovative AI models, products, and solutions.

Upstage

Founded in 2020, we’re a dynamic team of 100+ top AI researchers, engineers, and business leaders with a proven track record of building AI solutions trusted by major enterprises worldwide. Our team collaborates remotely, with hubs in Seoul, San Francisco, and Tokyo.