
Alphabet(GOOGLE)

Investor Relations

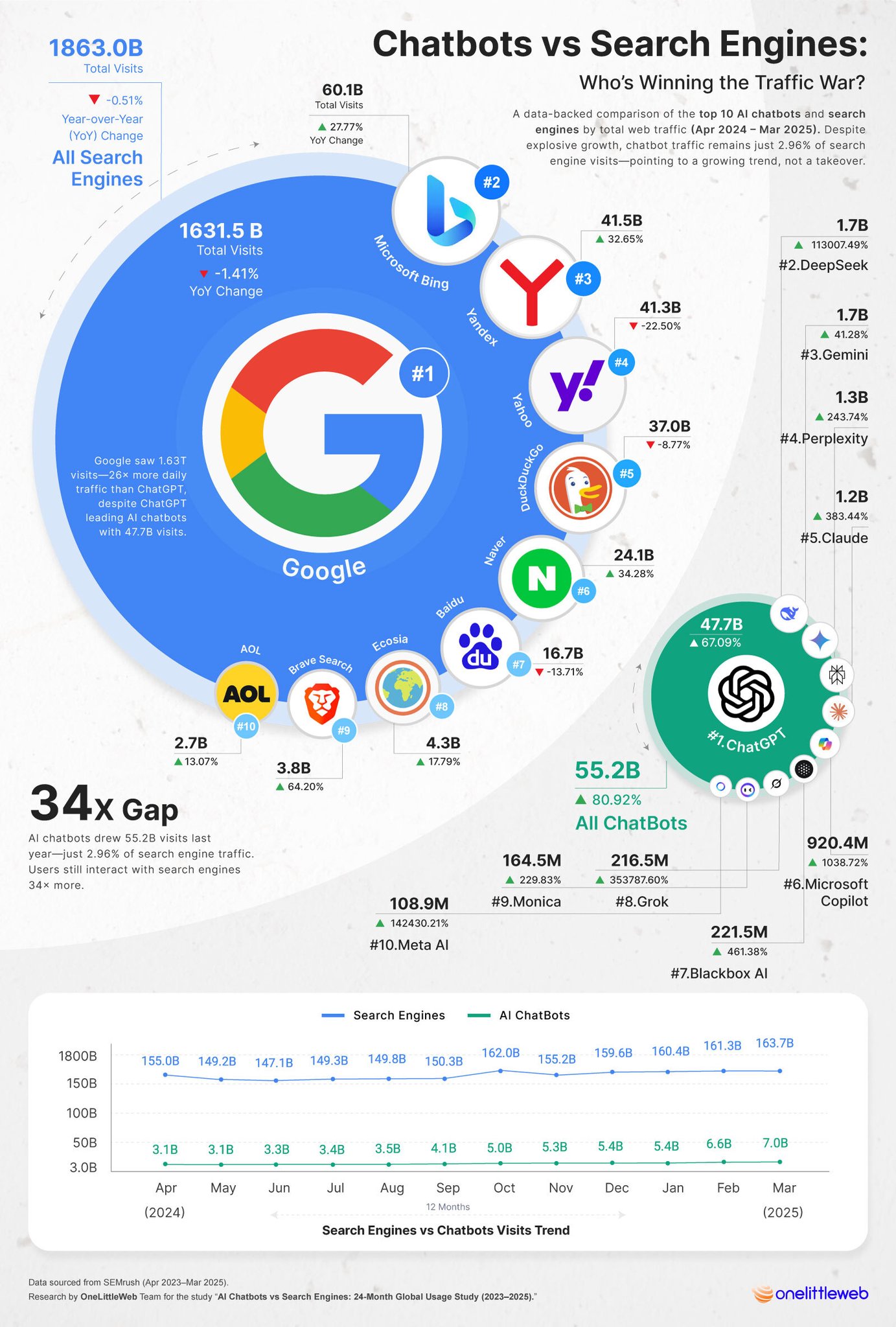

AI Chatbots traffic

Chatbots vs Search Engines

Articles

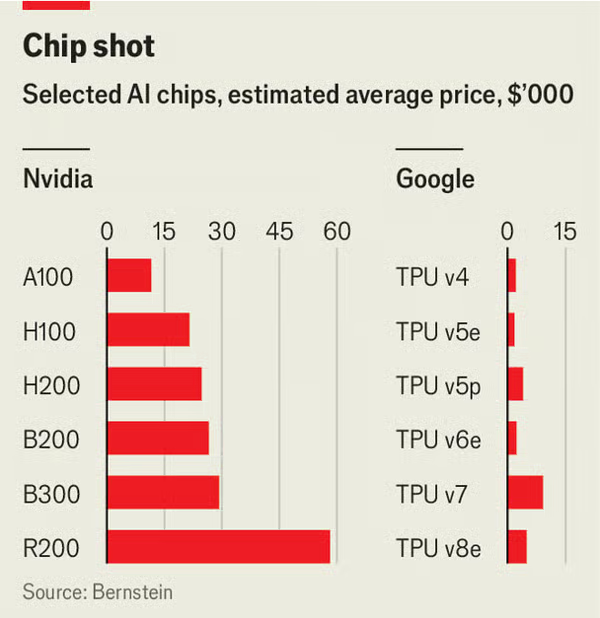

Nvidia’s customers have a big incentive to explore cheaper alternatives. Bernstein, an investment-research firm, estimates that Nvidia’s GPUs account for over two-thirds of the cost of a typical AI server rack. Google’s TPUs cost between a half and a tenth as much as an equivalent Nvidia chip (see chart). Those savings add up.

Jefferies, an investment bank, reckons Google will make about 3m of the chips next year, nearly half as many units as Nvidia.

- 2025-11-24 The self-driving taxi revolution is here

Waymo reached 1m monthly active users (MAUs) in the third quarter of 2025, according to Sensor Tower, a data provider, an 82% increase from the same period last year.

Americans currently spend around $50bn a year on ride-hailing.

Dara Khosrowshahi, Uber’s chief executive, has said that the market for self-driving technology could be worth $1trn or more in America alone.

Research conducted last year by Waymo with Swiss Re, an insurance giant, showed that its robotaxis generated 88% fewer property-damage claims and 92% fewer bodily-injury claims than the average human during 25m miles of driving, a performance which has improved further since.

A grisly accident in 2023 involving a robotaxi operated by Cruise, a competitor to Waymo, became existential after the company failed to provide full transparency during a federal investigation. General Motors, Cruise’s owner, subsequently shut its robotaxi service down.

Human drivers are estimated to account for 50-70% of the cost of a traditional ride-hailing service such as Uber, so stripping them out sounds like a quick way to undercut the incumbents. Yet all robotaxi services currently lose money, including Waymo. (In addition to receiving fistfuls of cash from Alphabet, it raised $5.6bn in a share offering to outside investors last year.)

That is because their vehicles are expensive; they are kitted out with costly safety features and trained on pricey AI chips. And unlike ride-hailing firms such as Uber, which rely on owner-drivers, robotaxi operators have to cover the cost both of acquiring and managing their fleets, including cleaning, fuel, maintenance and parking. They also need human supervisors to monitor their vehicles in case things go wrong.

As a result, Augustin Wegsheider of BCG, a consultancy, estimates that self-driving vehicles cost about $7-9 a mile to operate, compared with $2-3 a mile for traditional ride-hailers and $1 a mile for personal cars.

“They haven’t even scratched the surface because mission number one was making the operation safe.” McKinsey estimates that it will take a decade to bring costs below $2 a mile.
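As a rough illustration of what that timeline implies, the sketch below computes the constant annual cost decline needed to reach $2 a mile in ten years. It assumes BCG's $7-9 range as the starting point and reads McKinsey's "a decade" as exactly ten years of smooth decline. Combining the two firms' estimates this way is an assumption made here, not something either source states.

```python
def required_annual_decline(start_cost: float, target_cost: float, years: int) -> float:
    """Constant yearly fractional decline that takes start_cost down to target_cost."""
    return 1 - (target_cost / start_cost) ** (1 / years)


# Starting points are the BCG range quoted above; the ten-year horizon is an assumption.
decline_from_7 = required_annual_decline(7.0, 2.0, 10)
decline_from_9 = required_annual_decline(9.0, 2.0, 10)
print(f"from $7: {decline_from_7:.1%} per year, from $9: {decline_from_9:.1%} per year")
```

Under these assumptions, per-mile costs would have to fall by roughly 12-14% every year for a decade.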

Estimates for the cost of Waymo’s current generation of robotaxis range from $130,000 to $200,000 each.

For now, Waymo is the front-runner, at least in America. Its self-driving technology has been designated as Level 4, which means that in pre-approved areas its vehicles can operate without direct human supervision, whereas Tesla’s robotaxis are between Levels 2 and 3, meaning they still need a supervisor in the car. Because of Waymo’s focus on safety, it has kitted out its cars with more expensive hardware than Tesla. For instance, its latest vehicles have 13 cameras, six radars and four LiDARs, whereas Tesla relies solely on eight cameras. That has helped it win over regulators.

Robotaxi providers will also have to decide how to work with the big ride-hailing companies, particularly Uber. In 2020 it abandoned an effort to develop its own self-driving technology, but has since set its sights on becoming the preferred booking platform for robotaxis.

Uber’s bet is that human drivers and robotaxis will co-exist for years to come. Eventually, however, robotaxis will become the cheaper option, says Sarfraz Maredia, Uber’s head of autonomy, which is why the company is manoeuvring itself into position now. Its customer base gives it a big advantage; Sensor Tower reckons Uber has 42m MAUs, dwarfing Waymo.

- 2025-11-23 AI tokens are surging, but are profits?

In October Google, a search giant, said its systems process around 1.3 quadrillion tokens each month across all its products, an eight-fold increase from February.
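To put the eight-fold figure in perspective, here is a minimal sketch of the compound monthly growth rate it implies. It assumes February to October spans eight months and that growth was smooth over that period. Neither assumption comes from the article.

```python
def implied_monthly_growth(multiple: float, months: int) -> float:
    """Constant month-over-month growth rate that yields `multiple` after `months`."""
    return multiple ** (1 / months) - 1


OCTOBER_TOKENS = 1.3e15  # "around 1.3 quadrillion tokens each month"
GROWTH_MULTIPLE = 8.0    # "an eight-fold increase from February"
MONTHS = 8               # assumption: February -> October

monthly_rate = implied_monthly_growth(GROWTH_MULTIPLE, MONTHS)
february_tokens = OCTOBER_TOKENS / GROWTH_MULTIPLE
print(f"implied growth: {monthly_rate:.1%} per month")
print(f"implied February volume: {february_tokens:.3e} tokens per month")
```

Under these assumptions, token volume grew by a little under 30% a month.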

Google deploys them to produce “AI overviews” that summarise web pages instead of displaying a list of links. Barclays, a bank, estimates that these summaries account for over two-thirds of Google’s total token output.

Another is that the models themselves are becoming more verbose. As LLMs grow more sophisticated, they produce longer answers. EpochAI, a research firm, finds that the average number of output tokens for benchmark questions has doubled annually for standard models. “Reasoning” models, which explain their approach step by step, use eight times more tokens than simpler ones—and their usage is rising by about five-fold every year. Newer models are likely to follow this trend, meaning token growth is likely to continue.

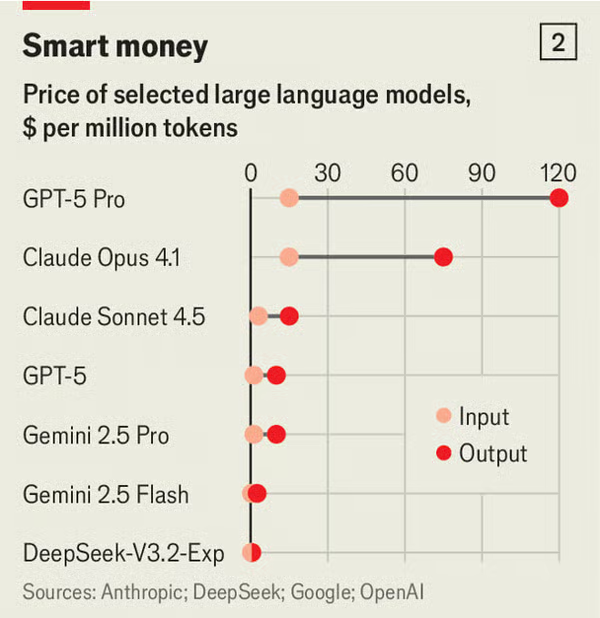

Yet cheaper tokens have not made AI cheap. Generating responses remains costly, says Wei Zhou of SemiAnalysis, a consultancy, because the models keep improving—and growing. So even as the price of tokens falls, better, more verbose models mean more tokens must be generated to complete a task. Thus Mr Zhou finds it “hard to imagine” a future where the marginal cost of providing AI services falls close to zero.

That is a problem for modelmakers because competition limits the margins they can add to their services. OpenAI charges developers about $120 per million output tokens for its most advanced model, GPT-5 Pro. DeepSeek, a Chinese rival, offers an open-source model at a fraction of the price (see chart 2). With many users increasingly willing to trade quality for cost, price pressure will only intensify.
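The interplay between token prices and token counts can be sketched with simple arithmetic. The $120 price and the eight-fold reasoning multiplier are the figures quoted above. The 500-token answer length and the halved price are hypothetical values chosen only for illustration.

```python
def output_cost_usd(output_tokens: int, usd_per_million_tokens: float) -> float:
    """Cost of generating `output_tokens` at a given per-million-token price."""
    return output_tokens / 1_000_000 * usd_per_million_tokens


PRICE = 120.0             # USD per million output tokens (GPT-5 Pro figure quoted above)
BASE_TOKENS = 500         # hypothetical length of a plain answer
REASONING_MULTIPLIER = 8  # "eight times more tokens" figure quoted above

plain_cost = output_cost_usd(BASE_TOKENS, PRICE)
reasoning_cost = output_cost_usd(BASE_TOKENS * REASONING_MULTIPLIER, PRICE)
# Hypothetical: even if the price halves, the longer answer still costs more.
reasoning_cost_half_price = output_cost_usd(BASE_TOKENS * REASONING_MULTIPLIER, PRICE / 2)

print(f"plain: ${plain_cost:.2f}, reasoning: ${reasoning_cost:.2f}, "
      f"reasoning at half price: ${reasoning_cost_half_price:.2f}")
```

With these inputs, halving the token price still leaves the reasoning-style answer four times as expensive as the plain one, which is the dynamic Mr Zhou describes.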

The Wall Street Journal reports that by 2028 OpenAI expects its operating losses to reach $74bn—around three-quarters of projected revenue.

Today, we are announcing that we plan to expand our use of Google Cloud technologies, including up to one million TPUs, dramatically increasing our compute resources as we continue to push the boundaries of AI research and product development. The expansion is worth tens of billions of dollars and is expected to bring well over a gigawatt of capacity online in 2026.

Anthropic now serves more than 300,000 business customers, and our number of large accounts—customers that each represent more than $100,000 in run-rate revenue—has grown nearly 7x in the past year.

Anthropic’s unique compute strategy focuses on a diversified approach that efficiently uses three chip platforms–Google’s TPUs, Amazon’s Trainium, and NVIDIA’s GPUs. This multi-platform approach ensures we can continue advancing Claude's capabilities while maintaining strong partnerships across the industry. We remain committed to our partnership with Amazon, our primary training partner and cloud provider, and continue to work with the company on Project Rainier, a massive compute cluster with hundreds of thousands of AI chips across multiple U.S. data centers.

In total, Anthropic will have access to well over a gigawatt of capacity coming online in 2026.

Anthropic will have access to up to one million TPU chips, as well as additional Google Cloud services, which will empower its research and development teams with leading AI-optimized infrastructure for years to come. Anthropic chose TPUs due to their price-performance and efficiency, and the company's existing experience in training and serving its models with TPUs.

Anthropic and Google Cloud initially announced a strategic partnership in 2023, with Anthropic using Google Cloud's AI infrastructure to train its models and making them available to businesses through Google Cloud's Vertex AI platform and through Google Cloud Marketplace. Today, thousands of businesses utilize Anthropic's Claude models on Google Cloud, including Figma, Palo Alto Networks, Cursor, and others.

AI has accelerated startup innovation more than any technology since perhaps the internet itself, and we’ve been fortunate to have a front row seat to much of this innovation here at Google Cloud. Nine of the top ten AI labs use Google Cloud, as does nearly every AI unicorn and more than 60% of the world’s gen AI startups overall.

These startups are building with our differentiated AI stack, which offers leading technology and choice at every layer, including a variety of TPU and GPU specialized chips for compute, and a broad range of models that excel at coding, image and video generation, collaboration, and more.

Startup customers: Afooga, Anara, Aptori, aSim, CerebraAI, Clavata.ai, Clip Media, CoVet, ColomboAI, Corma, Factory, Gobii, InstaLILY, Inworld AI, Krea.ai, Lovable, MaestroQA, Markups.ai, MLtwist, MNTN, Mosaic, OpenArt, OpenEvidence, Owl.AI, Parallel, Prodia, Provenbase, Qualia Clear, Rembrand, Resolve AI, SandboxAQ, Satlyt, Satisfi Labs, Savvy, Skyvern, Subject.com, Tali.ai, Tinuiti, Toonsutra, turbopuffer, Upwork, Visla, Windsurf, Zapia AI, Zefr.

In an interview with TechCrunch, Windsurf CEO Jeff Wang said the startup works with a variety of cloud providers, including Google Cloud, but it’s hard to say which company it uses the most. Lovable declined to comment.

Part of the reason so many AI startups work with Google Cloud is the generous deals it offers. Many of the AI startups Google works with started on its Google for Startups Cloud Program, in which it offers $350,000 in cloud credits. Google Cloud also offers a dedicated cluster of Nvidia GPUs for startups in the Y Combinator accelerator program.

Google is deepening our roots in the UK with the opening of our new data centre in Waltham Cross, Hertfordshire.

It’s part of a £5 billion investment including capital expenditure, research and development, and related engineering over the next two years – and encompasses Google DeepMind with its pioneering AI research in science and healthcare. It will help the UK develop its AI economy — advancing AI breakthroughs and supporting a projected 8,250 jobs annually in the UK.

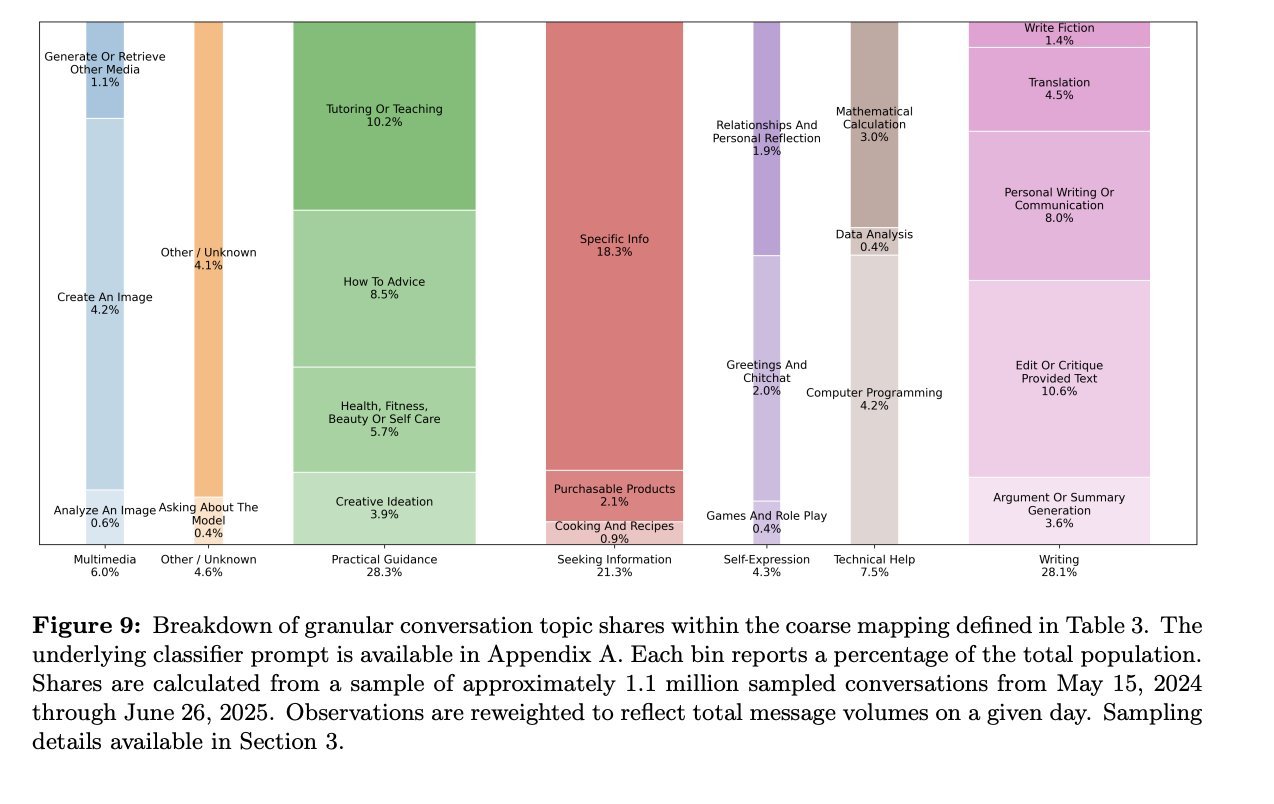

- 2025-09-16 ChatGPT usecases

For example, if you're running a large-scale cluster, we have two times the power efficiency. Meaning you get two times the FLOPS per watt. And with power now the scarce resource, you get a lot more capacity. We're typically seeing about a 50% performance delta between us and other players, and if you look at the total capacity you can get on a single system, you can get 118 times more throughput through our systems than you can from the next player.

And finally, Google has been at the forefront of most of the software that people are using for training. For example, compilers like JAX, XLA, Pathways, and all this software expertise allows us to optimize the stack.

We see demand from four customer segments. AI Labs, traditional enterprises, capital markets, high-performance computing applications.

On this infrastructure, we offer a suite of models. Not just Alphabet's, but 182 leading models from the industry.

Our own models fall in four categories: leading models for large-scale generative AI applications, Gemini; a leading suite of what's called diffusion models. Diffusion models create images, video, audio, speech, et cetera; a third set of models around scientific computation. For example, our time series model is used by many firms in financial services to do numerical prediction of sequences. Molecular design, we offer a model to help people design molecules which is getting a lot of interest in the pharmaceutical industry.

We're also very focused on operating discipline to improve operating margins. The three big areas of focus:

- One is making sure we're super efficient from the point of view of using our fleet and our machines so that we get capital efficiency. There's many hundreds of projects that people have done to optimize.

- We're improving our go-to-market organization, which now has a large customer base to sell to. And selling to existing customers is always easier than selling to new customers, so it helps us improve the cost of sales as a percentage of revenue.

- Finally, we're also building on a large suite of products already. So it helps us improve our engineering productivity. You see that in our results. We're growing top line and operating income.

But in the past, people chose cloud primarily as a mechanism to get developer efficiency. ... The big driver now is I really want to transform the organization. Can you help me by bringing AI expertise and products to help me?

We see organizations using AI in four domains: build digital products, transform customer service, streamline the core of the company in the back office, using it in their IT departments.

If you look at the work we do with capital markets, applying AI to synthesize data from information sources and then use it to actually feed algorithmic models.

There's dense models, mixture of experts, sparse models, do you need a sparse core or not. So we offer a range of accelerators.

PUE (Power Usage Effectiveness): the efficiency of electricity use in a data centre.
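PUE is conventionally defined as total facility energy divided by the energy delivered to IT equipment, so 1.0 is the theoretical floor and lower is better. A minimal sketch follows. The energy figures are hypothetical and are not Google's reported numbers.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical data centre: 1,000 kWh reaches the servers and a further
# 100 kWh goes to cooling, power conversion and lighting.
example_pue = pue(total_facility_kwh=1_100.0, it_equipment_kwh=1_000.0)
overhead_share = 1 - 1 / example_pue  # fraction of total energy that is overhead
print(f"PUE = {example_pue:.2f}, overhead = {overhead_share:.1%} of total energy")
```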

Now the Court has imposed limits on how we distribute Google services, and will require us to share Search data with rivals. We have concerns about how these requirements will impact our users and their privacy, and we’re reviewing the decision closely. The Court did recognize that divesting Chrome and Android would have gone beyond the case’s focus on search distribution, and would have harmed consumers and our partners.

Google will not have to sell its Chrome browser in order to address its illegal monopoly in online search, DC District Court Judge Amit Mehta ruled on Tuesday.

Besides letting Google keep Chrome, he’ll also let the company continue to pay distribution partners for preloading or placement of its search or AI products. But he did order Google to share some valuable search information with rivals that could help jumpstart their ability to compete, and bar the search giant from making exclusive deals to distribute its search or AI assistant products in ways that might cut off distribution for rivals.

In his 230-page ruling, Mehta explained that even though Google’s default status as the search engine on Chrome “undoubtedly contributes to Google’s dominance in general search,” forcing Google to sell it is ultimately “a poor fit for this case.”

Mehta also feared that upending Google’s payments to search distribution platforms would have negative effects rippling across the ecosystem. Banning these kinds of payments to companies like Apple and Mozilla for default placement on their browsers and devices could theoretically “bring about a much-needed thaw,” Mehta said, and even encourage a company like Apple to enter the search market itself. But, he concluded, granting such a remedy risks harming phone and browser makers by denying them significant revenue, while Google gets to keep its money while likely maintaining much of its user base.

“There is definitely truth to the fact that people use [GenAI] products to do what we would call informational tasks.”

Google’s on-device LLM is called Gemini Nano. Google’s Gemini app runs on cloud-based models, not Gemini Nano. AICore is an Android software module developed by Google that supports the use of on-device LLMs such as Gemini Nano. AICore currently only supports Google’s on-device Gemini Nano model.

AICore does not prevent other models from accessing a device’s NPU or TPU. Other on-device model providers could work with OEMs and chip manufacturers to access a device’s accelerators. But an OEM may not want to add a second system service alongside AICore and may prefer “to simply ship with a system service that allowed for plug-and-play use of models.”

The capacity to run multiple on-device AI models can be limited by the device’s storage and random-access memory (“RAM”). (stating that “how many different models can exist [on a single device] kind of depends on how big the models are” but that “right now there would be no problem on these premium devices having multiple models on storage” and that “practically speaking, . . . when you’re using one, you have it in RAM, and then when you’re not using it, you swap it out and you swap the other one in”). A single device may still have multiple on-device models; Samsung devices, for example, have both Gemini Nano and Samsung’s own model, Gauss, on the device.
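The swap-in, swap-out pattern described in the testimony can be sketched as a toy model host that keeps several models on storage but only one resident in RAM. The class, model names and sizes below are hypothetical. This is not how AICore or any vendor's runtime is implemented.

```python
from typing import Dict, Optional


class OnDeviceModelHost:
    """Toy host: many models on storage, at most one resident in RAM at a time."""

    def __init__(self, stored_models: Dict[str, int], ram_budget_mb: int):
        self.stored_models = stored_models  # model name -> size in MB (hypothetical)
        self.ram_budget_mb = ram_budget_mb
        self.resident: Optional[str] = None
        self.swaps = 0

    def use(self, name: str) -> str:
        size = self.stored_models[name]  # KeyError if the model is not on storage
        if size > self.ram_budget_mb:
            raise MemoryError(f"{name} needs {size} MB, budget is {self.ram_budget_mb} MB")
        if self.resident != name:
            if self.resident is not None:
                self.swaps += 1  # the current model is swapped out first
            self.resident = name
        return self.resident


host = OnDeviceModelHost({"model_a": 1_800, "model_b": 2_200}, ram_budget_mb=3_000)
host.use("model_a")
host.use("model_b")  # model_a is swapped out, model_b swapped in
host.use("model_b")  # already resident, no swap
```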

Google uses its Google Common Corpus (“GCC”) to pre-train its Gemini GenAI models. GCC is a dataset that involves large amounts of information scraped from the web and stored in a repository called Docjoins, which is “a data structure that Google uses to store URLs.” (“The main corpus of Docjoins is a large repository of the documents publicly available on the web and visited at least once by Googlebot in the last few months. It currently consists of over xxxx documents... By comparison, the external Common Crawl corpus is much smaller, with only a bit over 3 B in the latest release.”).

After a model is pre-trained, “[t]here is a second stage called post-training where models are exposed to data that imparts the different capabilities that [the creators] want them to have.” (“Pretraining is the first process in model training. And so—it occurs once. And the subsequent training process is called post training which is modifying, further modifying that pretrained model.”). For instance, if the model’s creators want the model to be good at writing code or answering questions, then they can post-train the model on data particular to these areas. (agreeing that “an example” of post-training “would be fine-tuning a foundation model to be able to answer Q and A”). All-purpose models can be post-trained to accomplish more than one task through exposure to large numbers of datasets encompassing broad collections of information.

It is a common business practice to filter your models in various ways, remove duplicates, remove spam, garbage, inappropriate content. There’s a lot of stuff out there that you don’t want to put into your models . . .
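A minimal sketch of the kind of filtering the witness describes: dropping empty documents, exact duplicates (after whitespace and case normalisation) and documents matching a blocklist. The function and the blocklist are hypothetical. Production pipelines use far more sophisticated near-duplicate detection and quality classifiers.

```python
from typing import Iterable, List


def filter_corpus(docs: Iterable[str], blocked_terms: Iterable[str]) -> List[str]:
    """Keep the first copy of each document that is non-empty and not blocklisted."""
    blocked = [term.lower() for term in blocked_terms]
    seen = set()
    kept = []
    for doc in docs:
        normalized = " ".join(doc.lower().split())  # collapse whitespace, ignore case
        if not normalized:
            continue  # garbage: empty or whitespace-only
        if normalized in seen:
            continue  # duplicate of a document already kept
        if any(term in normalized for term in blocked):
            continue  # spam or inappropriate content (toy substring check)
        seen.add(normalized)
        kept.append(doc)
    return kept


raw = ["Hello world", "hello   WORLD", "Buy cheap pills now", "", "A useful page"]
clean = filter_corpus(raw, blocked_terms=["cheap pills"])
```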

The capabilities of an LLM are limited by the content of its training data and when that training occurs, and their accuracy is similarly circumscribed by content and timing. Importantly, (1) if information is not in an LLM’s training data, it will not be able to produce a factual response to a query that seeks such information; (2) LLMs can provide reasonably accurate responses for frequently seen data but struggle to do so for lesser-known information; and (3) LLMs can forget the content of data they have previously been exposed to and thus cannot store information in a “lossless way.”

As noted, one key limitation is that LLMs have a knowledge cutoff. An LLM trained on data last updated in October 2024, for example, could not answer queries asking about Taylor Swift’s 2025 engagement to Travis Kelce. (discussing how LLMs “do not contain current events” as “[t]hey’re only ever limited to things that were . . . present in the training data at the time of its training, and they don’t update live”). Retraining an LLM on an updated dataset “takes weeks or months” and thus the LLM would be unable “to answer a user’s query about stuff that had happened the same day using this kind of mechanism.” Moreover, training is costly. (noting that adding more recent documents to pre-training data is expensive).

Another limitation is the problem of so-called “hallucinations,” facts made up by GenAI products “that are maybe probable to be true but not actually true.” (“A hallucination is essentially a statement that might sound factual but is really just generated by the language model.”). If a user poses a query that asks for information outside of its training data, the LLM can generate an incorrect response based on what it views to be the most probabilistic response. (noting that if an LLM “doesn’t have access to the right tools such as search or, you know, it was just told to give a response even if you don’t know the answer, it will do its best to give a response and generate a response that it thinks you’d be satisfied with”).

“Grounding” and retrieval-augmented generation (“RAG”) provide a solution to problems of factuality and recency. RAG is a grounding technique, and the terms are sometimes used interchangeably.

To ground its Gemini models, Google uses a proprietary technology called FastSearch. FastSearch is based on RankEmbed signals (a set of search ranking signals) and generates abbreviated, ranked web results that a model can use to produce a grounded response. FastSearch delivers results more quickly than Search because it retrieves fewer documents, but the resulting quality is lower than Search’s fully ranked web results.
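The general shape of retrieval-augmented generation can be sketched as two steps: retrieve a few relevant passages, then place them in the prompt so the model answers from them instead of from memory. The sketch below uses a toy word-overlap ranker and stops at prompt construction. It is not FastSearch or RankEmbed, and every function name in it is hypothetical.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercased set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Rank documents by word overlap with the query and keep the best top_k."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), idx, doc) for idx, doc in enumerate(documents)]
    scored = [item for item in scored if item[0] > 0]  # drop documents with no overlap
    scored.sort(key=lambda item: (-item[0], item[1]))  # best score first, stable on ties
    return [doc for _, _, doc in scored[:top_k]]


def build_grounded_prompt(query: str, documents: List[str], top_k: int = 2) -> str:
    """Assemble a prompt that asks the model to answer only from retrieved sources."""
    passages = retrieve(query, documents, top_k)
    sources = "\n".join(f"[{n}] {p}" for n, p in enumerate(passages, start=1))
    return f"Answer using only the sources below.\n\nSources:\n{sources}\n\nQuestion: {query}"


docs = [
    "The 2025 final was won by the home team.",
    "Bread is made from flour and water.",
    "The final took place in 2025 in London.",
]
question = "Who won the 2025 final?"
prompt = build_grounded_prompt(question, docs, top_k=2)
```

A smaller `top_k` means less to retrieve and read, which loosely mirrors the speed-versus-quality trade-off the ruling attributes to retrieving fewer documents.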

Google does not make FastSearch directly available to third parties through an API. Rather, the technology is integrated into a Google Cloud offering called Vertex AI, which is available to third parties to ground on Google Search results or other data sources. Vertex customers do not, however, receive the FastSearch-ranked web results themselves, only the information from those results. Google limits Vertex in this manner to protect its intellectual property. Google has multiple Vertex grounding agreements with third parties.

Google also uses the Vertex service to ground web results for the Gemini app. The Gemini app, however, receives through Vertex access to portions of other Search features, like the Knowledge Graph, that are not available to third parties.

Google has invested in Anthropic.

DeepSeek has open-sourced a pre-trained foundation model, which at least one company, Perplexity, has used to post-train and develop its GenAI offerings.

(“Meta, who primarily distributes their GenAI products through their other properties like Instagram and WhatsApp and Facebook has also had success in getting these products into consumers’ hands.”). Google has partnered with Meta to provide a Search API for Meta to ground its LLMs.

OpenAI previously sought out a partnership with Google for grounding, but Google declined.

In December 2024, Apple began integrating ChatGPT and OpenAI technology into its devices to power Apple Intelligence, Apple’s AI experience integrated into its devices, in exchange for revenue share payments.

Perplexity does not train its own foundational model but rather relies on foundational models pre-trained by other GenAI companies (like Meta and DeepSeek) and post-trains them from there. Perplexity’s consumer-facing products include its website (Perplexity.ai), a web app, mobile apps, and a recently launched web browser that integrates its GenAI. Though most of Perplexity’s consumers use the company’s free chatbot through which it earns some revenue through ads placed below query responses, it also offers a paid subscription version. Like other GenAI companies, Perplexity’s valuation has surged over the past couple of years. (discussing Perplexity’s $1 billion valuation in spring 2024 and $9 billion valuation in December 2024).

Grok is trained on xAI’s own foundation model. xAI integrates Grok into X.

“Q. How would you describe the competitive space that the Gemini app occupies? A. I would say I don’t think I’ve seen a more fierce competition ever in my 20-some years of working in technology.”

OpenAI calculated its share of the U.S. market as of December 2024 to be approximately 85%, with Claude at 3%, Gemini at 7%, and Perplexity and Copilot making up the remainder.

Google estimated that as of March 28, 2025, its Gemini app had roughly 140 million daily queries, with ChatGPT at 1.2 billion, MetaAI at over 200 million, Grok at 75 million, DeepSeek at 50 million, and Perplexity at 30 million.
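For a sense of scale, the daily-query estimates above can be turned into shares of the combined total for these six products. The sketch treats Meta AI's "over 200 million" as exactly 200 million, which is an assumption and a lower bound, and it ignores any product not listed.

```python
# Daily queries in millions, from Google's March 28, 2025 estimate quoted above.
daily_queries_millions = {
    "ChatGPT": 1200,
    "Meta AI": 200,  # "over 200 million": treated as a lower bound
    "Gemini": 140,
    "Grok": 75,
    "DeepSeek": 50,
    "Perplexity": 30,
}

total = sum(daily_queries_millions.values())
shares = {name: queries / total for name, queries in daily_queries_millions.items()}

for name, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{name:<10} {share:6.1%}")
```

On this basis ChatGPT handles roughly 71% of the listed query volume and Gemini about 8%. These shares are not directly comparable with OpenAI's own December 2024 estimate, which refers to a different date and may use a different metric.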

Rival GenAI products have had some success in obtaining distribution with OEMs and other companies. OpenAI, for instance, has partnered with Apple, T-Mobile, Yahoo, DuckDuckGo, and Microsoft.

Perplexity has a distribution deal with Motorola under which Motorola will preload Perplexity’s application onto new smartphones, although the agreement is not exclusive, the application will not be on the homescreen, and it will not be available via a wake word.

Motorola has also agreed to partner with Microsoft’s Copilot.

“AI technologies have the potential to transform search” but “AI has not supplanted the traditional ingredients that define general search.”

AI Overviews has potentially strengthened Google’s position in the GSE market. Since its introduction, Google Search queries in the United States have increased 1.5 to 2%.

[N]avigational queries are not a core use case of Perplexity. “Google’s top five queries by query volume are navigational queries and nearly 12% of all Google queries are navigational queries.” Further, commercial queries are not, at present, a common use case for GenAI applications and thus far have not cannibalized commercial queries on GSEs. (discussing the absence of cannibalization today but believing future cannibalization may occur based on the quality of a model, its pre-training data, and its grounding capabilities); (AI chatbots have not mastered commercial queries, but chatbot developers are increasingly introducing commercial concepts).

Even so, “there’s nothing which is fundamentally different between commercial and non-commercial queries.” (explaining that a GenAI chatbot would likely need to be grounded with a search index or with retailers’ databases in order to answer commercial queries, so that it “know[s] actively what are the current products available for sale and what are their prices”).

Today, the Gemini app drives little traffic to Google Search. (“I would say Gemini does not drive much, if any, meaningful traffic to Search today.”); (“I actually think if the user has to search after using Gemini, it’s a failure of the product. Like, the point of Gemini is to help you answer the question and help you get things done with the AI. If you then have to use another tool, you know, that’s not our desired outcome . . . .”).

“Circle to Search” is a recently launched search access point by Google for Android devices. It allows a user to execute a query by simply drawing a circle on a device’s display screen. Circle to Search returns a SERP in response to the “circled” query. An OEM must modify its user interface to allow Circle to Search to operate, but the search functionality itself is built on the open-source Android platform. An OEM can select the default search engine that will answer the query, which may be Google or some other search product that has the capacity to execute a “circle” query. (confirming that Circle to Search can be used with a search application other than Google and discussing Perplexity’s visual search offering).

“Google Lens” is a recent visual search capability built into Chrome. Google Lens’s current integration in Chrome only works if Google Search is set as the default GSE in the browser. (describing how visual search works with and without Google Search as the default GSE). Apple and Google entered into an agreement to bring Google Lens functionality to Apple devices in August 2024, with Google paying a revenue share on ads clicked on Google Lens-generated SERPs.

Google has entered additional agreements with Motorola and its parent company, Lenovo.

Under the remedies ordered today, Google will be barred from entering or maintaining exclusive contracts relating to the distribution of Google Search, Chrome, Google Assistant, and the Gemini app. Google cannot enter or maintain agreements that (1) condition the licensing of any Google application on the distribution, preloading, or placement of Google Search, Chrome, Google Assistant, or the Gemini app anywhere on a device; (2) condition the receipt of revenue share payments for the placement of one Google application on the placement of another Google application; (3) condition the receipt of revenue share payments on maintaining Google Search, Chrome, Google Assistant, or the Gemini app on any device, browser, or search access point for more than one year; or (4) prohibit any partner from simultaneously distributing any other GSE, browser, or GenAI product.

In addition, Google will have to make certain search index and user-interaction data available to certain competitors. Google will also be required to offer certain competitors search and search text ads syndication services, which will open up the market by enabling rivals and potential rivals to deliver high-quality search results and ads and compete with Google as they develop their own capacity.

- 2025-07-14 Cognition’s acquisition of Windsurf

The acquisition includes Windsurf’s IP, product, trademark and brand, and strong business. Above all, it includes Windsurf’s world-class people, some of the best talent in our industry, whom we’re privileged to welcome to our team.

In the immediate term, the Windsurf team will continue to operate as they have been, and we will remain focused on our work of accelerating your engineering with Devin. Over the coming months, we’ll be investing heavily in integrating Windsurf’s capabilities and unique IP into Cognition’s products.

$82M of ARR and a fast-growing business, with enterprise ARR doubling quarter-over-quarter.

A user base that includes 350+ enterprise customers and hundreds of thousands of daily active users.

Google is paying $2.4 billion in license fees as part of the deal to use some of Windsurf's technology under non-exclusive terms, according to a person familiar with the arrangement. Google will not take a stake or any controlling interest in Windsurf, the person added.

Windsurf CEO Varun Mohan, co-founder Douglas Chen, and some members of the coding tool's research and development team will join Google's DeepMind AI division.

The deal followed months of discussions Windsurf was having with OpenAI to sell itself in a deal that could value it at $3 billion, highlighting the interest in the code-generation space which has emerged as one of the fastest-growing AI applications, sources familiar with the matter told Reuters in June.

- 2025-07-11 The Next Stage of Windsurf

We’re excited to announce that Windsurf and Google have entered into an agreement that will help kick-start this next phase. As part of the agreement, Varun, Douglas, and some members of our R&D team will join Google. Most of Windsurf’s world-class team will continue to build the Windsurf product for the enterprise and enable our customers to maximize the outcomes from adopting this technology. Effective immediately, Jeff Wang, Windsurf’s Head of Business, has stepped into the role of interim CEO. Jeff has been part of Windsurf’s journey since mid-2023 and has served across many roles and capacities as the team has expanded and our projects grew more ambitious. Simultaneously, Graham Moreno, Windsurf’s VP of Global Sales, has stepped into the role of President. Graham has been the undeniable driving force for our enterprise business that has grown at a rate few enterprise software businesses have.

- 2025-05-08 Apple exec testimony on search volume drop hurts Google stock price

- 2025-05-07 Here's our statement on this morning’s press reports about Search traffic.

We continue to see overall query growth in Search. That includes an increase in total queries coming from Apple’s devices and platforms.

It scales up to 9,216 liquid cooled chips linked with breakthrough Inter-Chip Interconnect (ICI) networking spanning nearly 10 MW.

When scaled to 9,216 chips per pod for a total of 42.5 Exaflops, Ironwood supports more than 24x the compute power of the world’s largest supercomputer – El Capitan – which offers just 1.7 Exaflops per pod.

High Bandwidth Memory (HBM) capacity: Ironwood offers 192 GB per chip, 6x that of Trillium.

HBM bandwidth: reaching 7.37 TB/s per chip, 4.5x of Trillium’s.

Inter-Chip Interconnect (ICI) bandwidth: increased to 1.2 TBps bidirectional, 1.5x of Trillium’s.
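The pod-level and per-chip Ironwood figures above can be cross-checked with a little arithmetic. The per-chip compute, pod-wide HBM and implied Trillium values below are derived from the quoted numbers and ratios. They are not stated in the source, and the per-chip power figure is only an upper bound because the pod's "nearly 10 MW" presumably also covers cooling and networking.

```python
CHIPS_PER_POD = 9_216
POD_EXAFLOPS = 42.5
HBM_GB_PER_CHIP = 192
HBM_TBPS_PER_CHIP = 7.37
ICI_TBPS = 1.2
POD_POWER_MW = 10  # "nearly 10 MW", so treat as an upper bound

# Derived figures (not quoted in the source).
per_chip_petaflops = POD_EXAFLOPS * 1_000 / CHIPS_PER_POD
pod_hbm_gb = CHIPS_PER_POD * HBM_GB_PER_CHIP
watts_per_chip_max = POD_POWER_MW * 1_000_000 / CHIPS_PER_POD

# Trillium values implied by the stated 6x, 4.5x and 1.5x ratios.
implied_trillium = {
    "hbm_gb": HBM_GB_PER_CHIP / 6,
    "hbm_tbps": HBM_TBPS_PER_CHIP / 4.5,
    "ici_tbps": ICI_TBPS / 1.5,
}

print(f"compute per chip: {per_chip_petaflops:.2f} PFLOPS")
print(f"HBM per pod: {pod_hbm_gb / 1_000_000:.2f} PB")
print(f"power per chip (upper bound): {watts_per_chip_max:.0f} W")
print(implied_trillium)
```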

Leading thinking models like Gemini 2.5 and the Nobel Prize-winning AlphaFold all run on TPUs today.

Wiz’s solution rapidly scans the customer’s environment, constructing a comprehensive graph of code, cloud resources, services, and applications – along with the connections between them. It identifies potential attack paths, prioritizes the most critical risks based on their impact, and empowers enterprise developers to secure applications before deployment. It also helps security teams collaborate with developers to remediate risks in code or detect and block ongoing attacks.

Wiz is an innovative leader, creating new categories of cybersecurity solutions in the last 12 months, including code-to-cloud security and cloud-native runtime defense, further strengthening its impact.

This will help spur the adoption of multicloud cybersecurity, the use of multicloud, and competition and growth in cloud computing.

Five years ago, my fellow cofounders and I set out to create something security and development teams would love. We embarked on a significant mission: to help every organization secure everything they build and run in the cloud – any cloud.

We both also believe Wiz needs to remain a multicloud platform, so that across any cloud, we will continue to be a leading platform. We will still work closely with our great partners at AWS, Azure, Oracle, and across the entire industry.

Google LLC today announced it has signed a definitive agreement to acquire Wiz, Inc., a leading cloud security platform headquartered in New York, for $32 billion, subject to closing adjustments, in an all-cash transaction.

This acquisition represents an investment by Google Cloud to accelerate two large and growing trends in the AI era: improved cloud security and the ability to use multiple clouds (multicloud).

Wiz delivers an easy-to-use security platform that connects to all major clouds and code environments to help prevent cybersecurity incidents.

Wiz’s products will continue to work and be available across all major clouds, including Amazon Web Services, Microsoft Azure, and Oracle Cloud platforms, and will be offered to customers through an array of partner security solutions.

On Search, rich and multimodal experiences like AI Overviews, Circle to Search, and Lens give people new ways to express exactly what they want, more naturally than ever before. We already see more than 5 trillion searches on Google annually, and with AI, we're continuing to expand the types of questions that people can ask.

Quarterly Earnings

Q4 2025

Q3 2025

Q2 2025

At I/O in May, we announced that we processed 480 trillion monthly tokens across our surfaces. Since then we have doubled that number, now processing over 980 trillion monthly tokens.
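A quick check of the "doubled" claim, assuming the two token counts quoted above are directly comparable (the constant names are illustrative):

```python
# Monthly tokens processed, in trillions, as quoted on the call.
TOKENS_AT_IO_TRILLION = 480
TOKENS_NOW_TRILLION = 980  # stated as "over 980", so this is a lower bound

growth_multiple = TOKENS_NOW_TRILLION / TOKENS_AT_IO_TRILLION
added_trillion = TOKENS_NOW_TRILLION - TOKENS_AT_IO_TRILLION

print(f"Growth multiple: {growth_multiple:.2f}x")       # about 2.04
print(f"Added monthly tokens: {added_trillion} trillion")  # 500
```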

The Gemini app now has more than 450 million monthly active users.

All queries and commercial queries on Search continue to grow year over year and our new AI experiences significantly contributed to this increase in usage. We are also seeing that our AI features cause users to search more as they learn that Search can meet more of their needs.

AI Overviews are now driving over 10% more queries globally.

AI Mode is still rolling out but already has over 100 million monthly active users in the US and India.

The number of new GCP customers increased by nearly 28% quarter over quarter.

The 12% increase in Search and Other revenues was led by growth across all verticals, with the largest contributions from retail and financial services.

Google Lens searches are one of the fastest-growing query types on Search and grew 70% since this time last year. The majority of Lens searches are incremental, and we're seeing healthy growth for shopping queries using Lens.

Circle to Search is now on over 300 million Android devices. We've been adding capabilities to help people explore complex topics and ask follow-up questions without switching apps. For example, gamers can now use Circle to Search while playing mobile games to see an AI Overview or answers.

In the second quarter, depreciation increased $1.3 billion year-over-year to $5 billion, reflecting a growth rate of 35%. Given the recent increase in CapEx investments, we expect the growth rate in depreciation to accelerate further in Q3.
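The 35% growth rate can be reproduced from the two dollar figures in the statement above. The sketch below is a minimal check using the rounded numbers as quoted; the function name is illustrative.

```python
def implied_prior_and_growth(current: float, increase: float) -> tuple[float, float]:
    """Return (prior-year value, year-over-year growth rate) from the
    current value and the absolute year-over-year increase."""
    prior = current - increase
    return prior, increase / prior

# Figures in billions of dollars, as quoted for Q2 2025.
prior_depreciation, growth_rate = implied_prior_and_growth(current=5.0, increase=1.3)

print(f"Implied Q2 2024 depreciation: ${prior_depreciation:.1f}bn")  # about 3.7
print(f"Implied growth rate: {growth_rate:.1%}")                     # about 35%
```

Because both inputs are rounded, the result only confirms that the quoted 35% is consistent with them.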

Our full-stack approach, which combines AI infrastructure, AI research, and AI products and platforms, positions us well to deliver new products and services across the company.

On your second question on AI Mode versus the Gemini standalone app, broadly, there are some use cases where you can get a great experience in both places. But there are use cases which are very specific.

I think where the queries are information-oriented, and people really want to rely on the information but have the full power of AI, I think AI Mode really shines in that. You can go there and you know it's backed up. The Gemini models are using Search deeply as a tool. And so it's all grounded in that Search experience. And I think users are responding very positively to it.

And in the Gemini standalone app, you see everything from people having a long conversation, just trying to pass time, to early cases where people may get into a therapy-like experience. So these are all emerging experiences of what people do.

Q1 2025

AI Overviews is going very well with over 1.5 billion users per month, and we’re excited by the early positive reaction to AI Mode.

In subscriptions, we surpassed 270 million paid subscriptions, with YouTube and Google One as key drivers.

In March we released AI Mode, an experiment in Labs. It expands what AI Overviews can do with more advanced reasoning, thinking and multimodal capabilities to help with questions that need further exploration and comparisons. On average, AI Mode queries are twice as long as traditional Search queries.

YouTube Music and Premium reached over 125 million subscribers, including trials, globally.

Waymo is now safely serving over a quarter of a million paid passenger trips each week. That’s up 5X from a year ago.

This past quarter, Waymo opened up paid service in Silicon Valley. Through our partnership with Uber, we expanded in Austin and are preparing for our public launch in Atlanta later this summer. We recently announced Washington, DC, as a future ride-hailing city, going live in 2026 alongside Miami.

Waymo continues progressing on two important capabilities for riders — airport access and freeway driving.

Google services revenues were $77 billion for the quarter, up 10% year on year, driven by strong growth in Search and YouTube, partially offset by year-on-year decline in network revenues. To add some further color to the performance, the 10% increase in Search and Other revenues was led by financial services, primarily due to strength in Insurance followed by Retail.

We continue to see a revenue mix shift: Google Search grew at double-digit levels, while network revenues, which have a much higher TAC rate, declined.

Sales and marketing expenses decreased 4%, primarily reflecting a decline in compensation expenses.

First, in terms of revenue, I'll highlight a couple of items that we mentioned last quarter that will have an impact on second quarter and 2025 revenue.

First, in Google services, advertising revenue in 2025 will be impacted by lapping the strength we experienced in the financial services vertical throughout 2024.

In the first quarter, we saw 31% year-on-year growth in depreciation from the increase in technical infrastructure assets placed in service. Given the increase in CapEx investments over the past few years, we expect the growth rate in depreciation to accelerate throughout 2025.

Second, as we've previously said, we expect some headcount growth in 2025 in key investment areas. As we've disclosed previously, due to a shift in the timing of our annual employee stock-based compensation awards beginning in 2023, our first quarter stock-based comp expense is relatively lower compared to the remaining quarters of the year.

Search was led, again, by finance, due primarily to ongoing strength in insurance. Retail, health care, and travel were also sizable contributors to growth.

At the end of 2024. And we're still focused on driving efficiency and productivity throughout the organization.

We're focusing on continuing to moderate the pace of compensation growth, looking at our real estate footprint, and, again, the build-out and utilization of our technical infrastructure across the business.

Q4 2024

Q3 2024

Q2 2024

Q1 2024

And now we are starting to bring AI Overviews to the main Search page. We are being measured in how we do this, focusing on areas where gen AI can improve the Search experience while also prioritizing traffic to websites and merchants.

We also remain focused on long-term efforts to durably reengineer our cost base. You can see the impact of this work reflected in our operating margin improvement. We continue to manage our headcount growth and align teams with our highest priority areas. Beyond our teams, we are very focused on our cost structures, procurement, and efficiency.

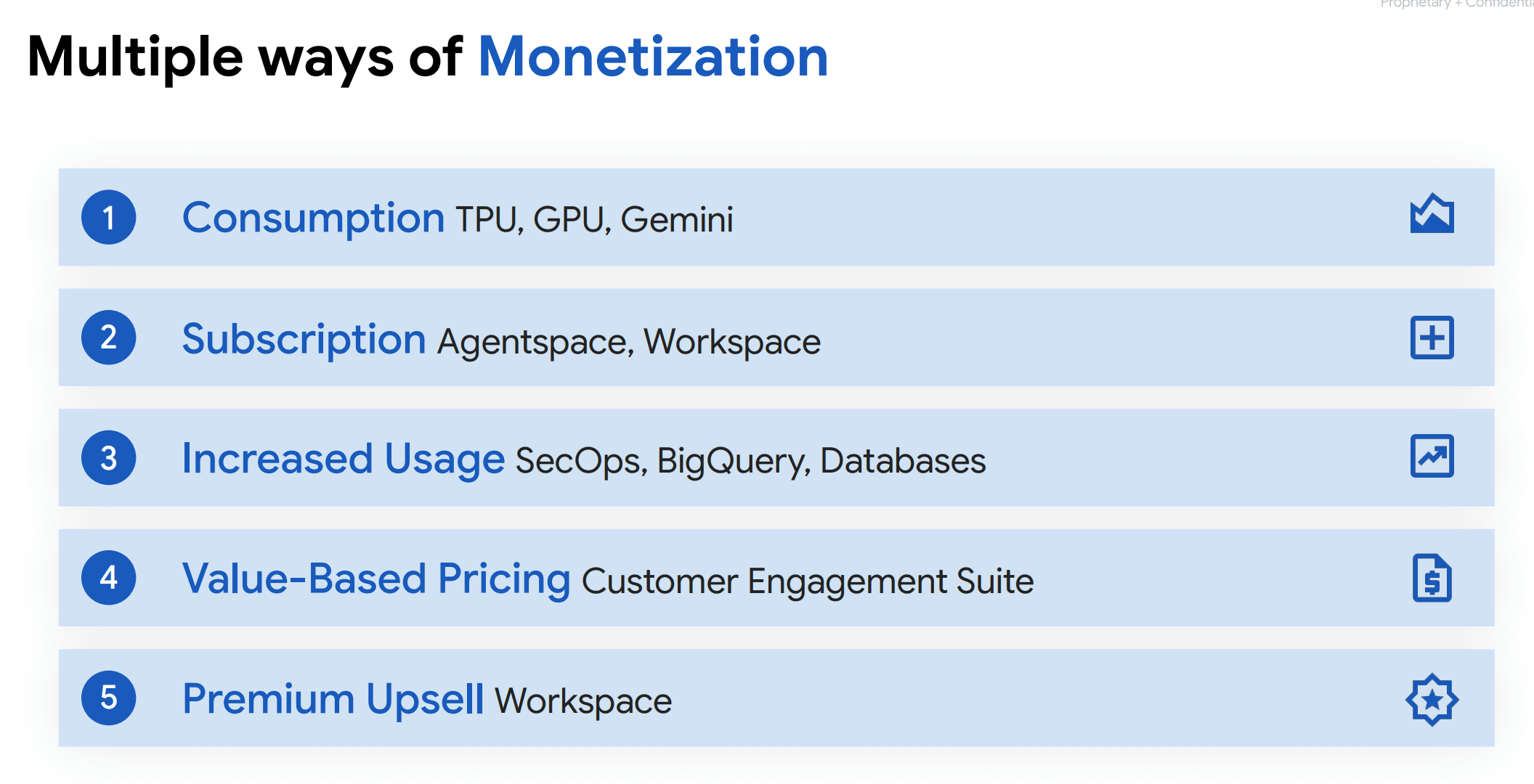

Finally, our monetization path. We have clear paths to AI monetization through Ads and Cloud as well as subscriptions.

Today, more than 60% of funded gen AI start-ups and nearly 90% of gen AI unicorns are Google Cloud customers.

And on subscriptions, which are increasingly important for YouTube, we announced that in Q1, YouTube surpassed 100 million Music and Premium subscribers globally, including trialers.

Waymo's fully autonomous service continues to grow ridership in San Francisco and Phoenix with high customer satisfaction, and we started offering paid rides in Los Angeles and testing rider-only trips in Austin.

Q4 2023

- Alphabet Announces Fourth Quarter and Fiscal Year 2023 Results

- Alphabet Inc. (GOOG) Q4 2023 Earnings Call Transcript

- 2022 Alphabet Annual Report (PDF)

Q3 2023

Q2 2023

Q1 2023

Q4 2022

- Alphabet Announces Fourth Quarter and Fiscal Year 2022 Results

- Alphabet Inc. (GOOG) Q4 2022 Earnings Call Transcript

- 2022 Alphabet Annual Report (PDF)

Q3 2022

Q2 2022

Q1 2022

Q4 2021

- Alphabet Announces Fourth Quarter and Fiscal Year 2021 Results

- Alphabet Inc. (GOOG) Q4 2021 Earnings Call Transcript

- 2021 Alphabet Annual Report (PDF)