
Nvidia

Nvidia Investor Relations

Investment Articles

Today, Groq announced that it has entered into a non-exclusive licensing agreement with Nvidia for Groq’s inference technology.

As part of this agreement, Jonathan Ross, Groq’s Founder, Sunny Madra, Groq’s President, and other members of the Groq team will join Nvidia to help advance and scale the licensed technology.

Groq will continue to operate as an independent company with Simon Edwards stepping into the role of Chief Executive Officer.

Two hours south of Jeddah, on Saudi Arabia’s Red Sea coast, the Al Shuaiba solar farm blankets 50 square kilometres of desert. The first phase of the project, started in 2024, produces 600 megawatts of electricity at just 3.9 Saudi halalas (just over a cent) per kilowatt-hour, nearly a twentieth of the cost of generation at Britain’s planned Hinkley Point C nuclear power plant. Saudi Arabia’s plan for all this cheap electricity is to power enormous data centres for artificial intelligence (AI).

The cost of inference, the process of querying and getting answers from an AI system, is made up of two things—the fixed cost of computer hardware, and the ongoing cost of the electricity to run it. Cutting corners on hardware is a false economy, since the newest and most expensive chips are usually more efficient at running the best algorithms. Offering cheaper AI systems, therefore, comes down to using cheaper electricity. On that, Saudi Arabia reckons it has the edge.
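The two-part cost split described above can be made concrete with a back-of-the-envelope model. The sketch below is an illustration, not Humain's or anyone else's actual pricing model. The hardware price, power draw, lifetime and throughput are hypothetical placeholders, and the model assumes the system runs at full power and full throughput for its whole life. Only the Al Shuaiba tariff comes from the text: 3.9 halalas is about $0.0104 at the pegged rate of 3.75 riyals to the dollar.

```python
# Sketch: per-token inference cost = amortized hardware + electricity.
# All hardware, power and throughput figures are hypothetical placeholders.

HOURS_PER_YEAR = 365 * 24


def cost_per_million_tokens(
    hardware_usd: float,
    lifetime_years: float,
    power_kw: float,
    usd_per_kwh: float,
    tokens_per_s: float,
) -> float:
    """USD cost of producing one million tokens over the hardware's lifetime.

    ASSUMPTION: the system draws full power and delivers full throughput for
    its entire lifetime; lower real utilization raises the hardware share.
    """
    hours = lifetime_years * HOURS_PER_YEAR
    lifetime_tokens = tokens_per_s * hours * 3600       # tokens over the lifetime
    electricity_usd = power_kw * hours * usd_per_kwh    # energy bill over the lifetime
    return (hardware_usd + electricity_usd) / lifetime_tokens * 1e6


if __name__ == "__main__":
    # Hypothetical rack: $3M, 5-year life, 120 kW draw, 500k tokens/s.
    for label, price in [("Al Shuaiba (~$0.0104/kWh)", 0.0104),
                         ("hypothetical $0.10/kWh", 0.10)]:
        print(label, round(cost_per_million_tokens(3e6, 5, 120, price, 5e5), 4))
```

Only the electricity term differs between the two runs. How large it is relative to the hardware term depends entirely on the placeholder inputs.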

This strategy became a national priority in May and is backed by the state’s de facto ruler, Muhammad bin Salman, known as MBS. A new company, Humain, has centralised the efforts under the leadership of Tareq Amin, boss of Aramco Digital, the tech arm of the state-owned energy company. “We hit the ground sprinting, not just walking,” Mr Amin says.

Humain’s mission is wrapped up in Saudi Arabia’s wider “Vision 2030” strategy, a plan to pivot the country away from its dependence on extracting fossil fuels. Executing the overall vision within the available constraints is “the number one risk”, says Mr Amin. “We have no choice. We have to do this, there is no plan B.” Born in Jordan, Mr Amin has taken on big challenges before, having worked on infrastructure projects for Reliance Jio, an Indian telecoms company, and Rakuten, a Japanese conglomerate.

The conditions seem favourable. Data centres need power to run on, land to sit on and chips to fill them. The first is Saudi Arabia’s strength. The second is also easy to come by: the country is large and sparsely populated, and with government backing, building permits are straightforward to obtain. In its first two weeks, Mr Amin says, Humain found more than 200 potential sites with access to a combined 15.6 gigawatts of energy supply, including four large plots situated next to sufficient solar power.

Chips have been trickier. The state’s AI data-centre journey began in February with a deal between Aramco Digital and Groq, an AI chip company (not the xAI model with a similar name), to obtain $1.5bn of the company’s semiconductors. Those chips are designed specifically for inference workloads. That has made them unappealing to many large AI labs, which value the flexibility to both train and run models on the same hardware. But they are well suited to reducing the cost of using models by making it cheaper to export tokens, the fundamental unit of AI use.

A token is just a fragment of a word. Most commercial AI products charge a fee for every token used in a query ($1.25 per million for OpenAI’s GPT-5, for instance), and a separate fee for every token produced in the output ($10 per million). Humain’s offer to AI companies is simple: run those AI models on Saudi electricity, and produce the output tokens for much less than customers are billed. With cheap power and efficient chips, Humain was able to sell output tokens for around half market price, Mr Amin says.
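Under the split pricing described above, a query's cost is each token count multiplied by its own rate. The sketch below uses the GPT-5 prices quoted in the text. The 2,000-token prompt, the 500-token answer and the halved output price are illustrative assumptions; the halved price stands in for Humain's "around half market price". The function name is hypothetical.

```python
# Per-query billing under per-token pricing. The GPT-5 list prices come from
# the text; token counts and the halved output price are illustrative.
GPT5_INPUT_USD_PER_M = 1.25    # USD per million input (prompt) tokens
GPT5_OUTPUT_USD_PER_M = 10.00  # USD per million output (generated) tokens


def query_cost_usd(
    input_tokens: int,
    output_tokens: int,
    input_usd_per_m: float = GPT5_INPUT_USD_PER_M,
    output_usd_per_m: float = GPT5_OUTPUT_USD_PER_M,
) -> float:
    """Bill input and output tokens separately, each at its own per-million rate."""
    return (input_tokens * input_usd_per_m + output_tokens * output_usd_per_m) / 1_000_000


if __name__ == "__main__":
    list_price = query_cost_usd(2_000, 500)
    # Hypothetical: output tokens sold at half the list price.
    discounted = query_cost_usd(2_000, 500, output_usd_per_m=GPT5_OUTPUT_USD_PER_M / 2)
    print(f"list: ${list_price:.4f}  half-price output: ${discounted:.4f}")
    # prints "list: $0.0075  half-price output: $0.0050"
```

At list price, the 500 output tokens cost twice as much as the 2,000 input tokens ($0.005 vs $0.0025). That helps explain why cheaper output tokens are the lever Humain is competing on.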

In November Humain secured the most cutting-edge chips. A visit to America by MBS—also the chair of Humain, and whose face sits at the top of its website—included a chummy meeting with Donald Trump, which unlocked a licence to import 35,000 top-flight chips from Nvidia, costing around $1bn. That is not enough to fill more than a single data centre for the types of big AI companies Humain wants to provide services to, but it represents a stark reversal on earlier American attempts to keep the most valuable AI computing hardware available only to the country’s closest allies. Shortly before, AirTrunk, a data-centre builder, had signed a $3bn deal with Humain to build a data-centre campus in the country.

Saudi Arabia is not only making data centres, it is also using them. ALLaM, an Arabic-language AI model built with the Saudi Data & AI Authority (SDAIA), another wing of the state, has been provided to civil servants. Humain has also signed deals with firms like Adobe to have this model incorporated in their applications.

Such partnerships suggest Saudi Arabia is on the right track to seeding a viable AI sector, says Derar Saifan, a partner at PwC, a consultancy. He expects to see the country break into the top five of global AI hubs in the next five to seven years.

The early successes have raised Humain’s ambition further. Mr Amin now talks not only of exporting tokens or training models, but of building a “world-first AI operating system for the enterprise”, a direct competitor to Microsoft Windows where human resources, finance and legal departments are replaced by AI agents and the interface is built around prompting chatbots rather than clicking on icons. It’s a bold, potentially quixotic, vision. “I cannot slip my timelines, and that’s what keeps me awake,” says Mr Amin. “I’m not underestimating the task.”

- 2025-12-01 NVIDIA and Synopsys Announce Strategic Partnership to Revolutionize Engineering and Design

NVIDIA invested $2 billion in Synopsys (SNPS) common stock at a purchase price of $414.79 per share.
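The share count follows from dividing the investment by the per-share price. This minimal sketch uses Python's `decimal` module to avoid binary floating-point rounding; the function name is hypothetical. The same calculation applies to the Intel investment noted later ($5 billion at $23.28 per share). It rounds down to whole shares, which may not match how the final share counts were reported.

```python
from decimal import Decimal


def whole_shares(investment_usd: str, price_per_share_usd: str) -> int:
    """Whole shares a fixed-dollar investment buys at a fixed per-share price.

    Decimal avoids binary floating-point rounding on prices like 414.79;
    floor division discards any fractional share.
    """
    return int(Decimal(investment_usd) // Decimal(price_per_share_usd))


if __name__ == "__main__":
    print(whole_shares("2000000000", "414.79"))  # Synopsys: 4821717
    print(whole_shares("5000000000", "23.28"))   # Intel: 214776632
```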

- 2025-11-24 'Big Short' investor Michael Burry just launched a Substack and took aim at Nvidia in his first post

At the turn of this century, Burry writes, the Nasdaq was driven by "highly profitable large caps, among which were the so-called 'Four Horsemen' of the era — Microsoft, Intel, Dell, and Cisco."

He writes that a key issue with the dot-com bubble was "catastrophically overbuilt supply and nowhere near enough demand," before adding that it's "just not so different this time, try as so many might do to make it so."

Burry calls out the "five public horsemen of today's AI boom — Microsoft, Google, Meta, Amazon and Oracle" along with "several adolescent startups" including Sam Altman's OpenAI.

"And once again there is a Cisco at the center of it all, with the picks and shovels for all and the expansive vision to go with it," Burry writes, after noting the internet-networking giant's stock plunged by over 75% during the dot-com crash. "Its name is Nvidia."

"If you go around popping a lot of balloons, you are not going to be the most popular fellow in the room."

- 2025-11-24 Three big holes in Nvidia's safety net

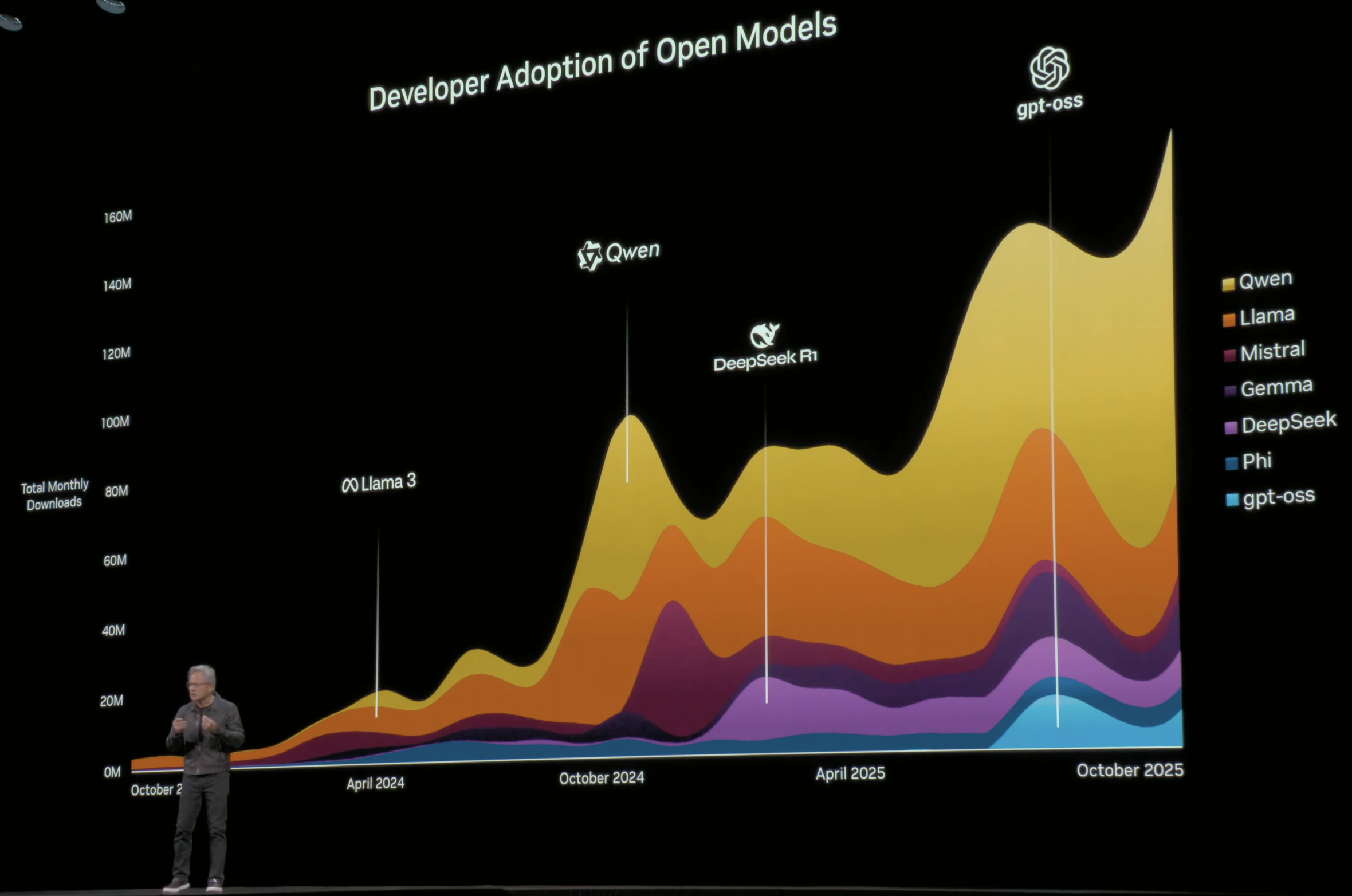

As for Nvidia, demand for its products must remain red-hot to sustain its valuations. That means leaning even harder on the network effect that brought it this far. But it can’t grow in perpetuity, even as its dominance currently seems impenetrable. The risks to its position could emerge from any number of directions, and none should be dismissed outright.

First, the AI boom could cool, deflating demand as quickly as it was inflated. Second, a competitor could notch a real technological leap, narrowing Nvidia’s lead. And perhaps most plausibly, one of Nvidia’s own customers could engineer its way out of dependence on the company’s semiconductors. This risk grows as hyperscalers pour billions into in-house designs.

One complaint about the dominance of the Magnificent Seven stocks has always been that they compete with each other, yet they are priced on the implicit assumption that they’ll all win equally.

Consider Google’s rollout of its flagship Gemini 3 last week. It is widely viewed as the most capable AI model yet, but what is especially notable is how it was built. Google trained the model entirely on its own Tensor Processing Units (TPUs) rather than on Nvidia GPUs, which have become the industry’s default.

- 2025-11-22 How Nvidia GPUs Compare To Google’s And Amazon’s AI Chips

- 2025-11-19 Elon Musk’s xAI will be first customer for Nvidia-backed data center in Saudi Arabia

- 2025-11-19 Why Nvidia Stock Could Reach a $20 Trillion Market Cap by 2030

- 2025-10-28 NVIDIA GTC Washington, DC: Live Updates on What’s Next in AI

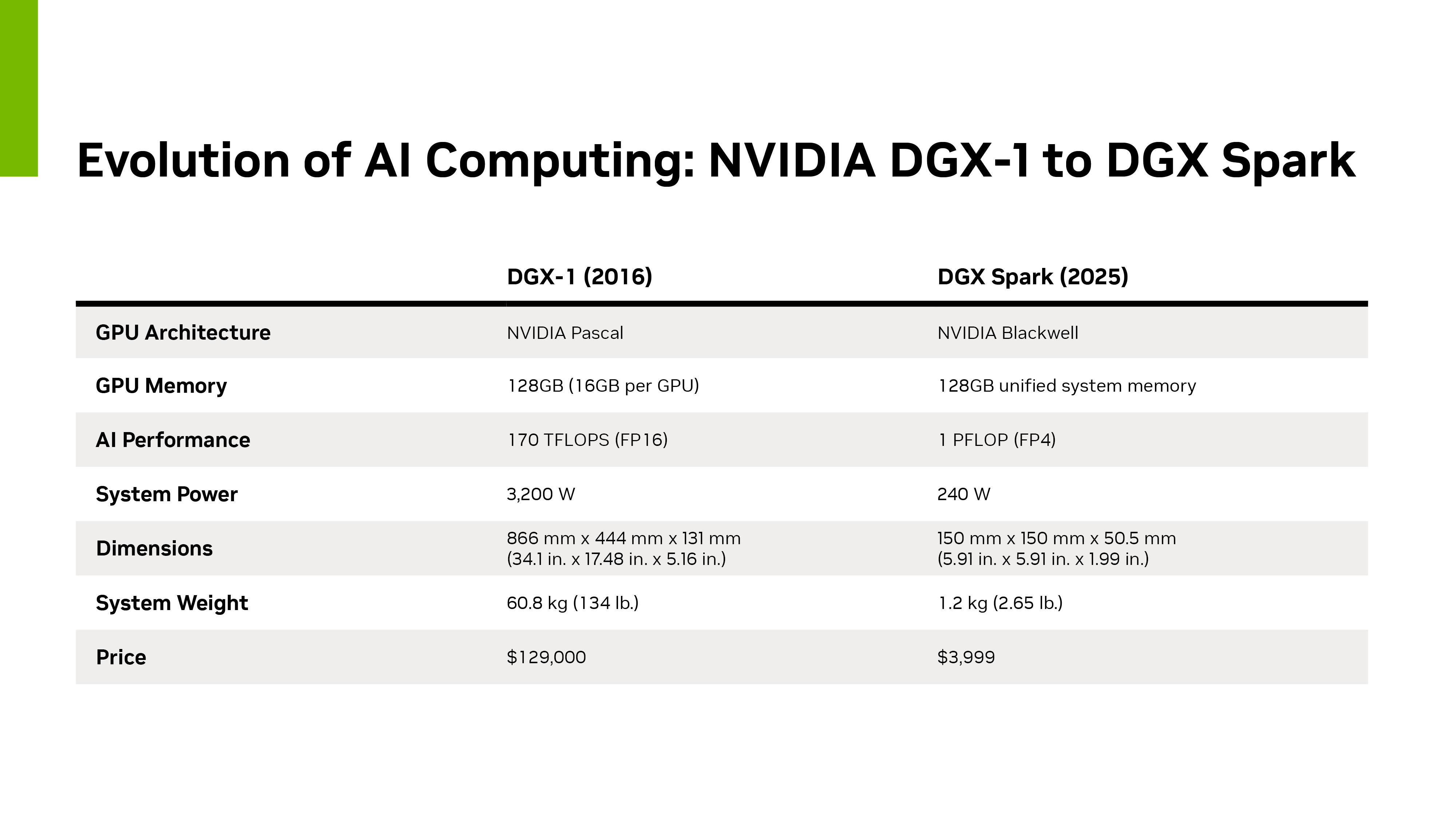

As a new class of computer, DGX Spark delivers a petaflop of AI performance and 128GB of unified memory in a compact desktop form factor, giving developers the power to run inference on AI models with up to 200 billion parameters and fine-tune models of up to 70 billion parameters locally. In addition, DGX Spark lets developers create AI agents and run advanced software stacks locally.
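The 200-billion-parameter figure is easiest to read as a memory budget. The sketch below counts weight memory only (parameters times bits per parameter, divided by eight), in decimal gigabytes. It assumes, rather than quotes, that the inference figure relies on 4-bit weights such as NVFP4. It ignores the KV cache and activations, and for fine-tuning it also ignores gradients and optimizer state. The helper name is hypothetical.

```python
def weight_memory_gb(params_billion: float, bits_per_param: float) -> float:
    """Decimal GB for model weights alone: params x bits / 8 bits-per-byte."""
    return params_billion * bits_per_param / 8


UNIFIED_MEMORY_GB = 128  # DGX Spark unified memory, from the text

if __name__ == "__main__":
    # Weights only; KV cache, activations and optimizer state are ignored.
    for params, bits in [(200, 4), (200, 8), (70, 16), (70, 4)]:
        gb = weight_memory_gb(params, bits)
        verdict = "fits" if gb <= UNIFIED_MEMORY_GB else "does not fit"
        print(f"{params}B params @ {bits}-bit: {gb:.0f} GB -> {verdict}")
```

At 16-bit precision, even a 70-billion-parameter model's weights (140 GB) exceed 128GB. That suggests, as an inference rather than something the release states, that the fine-tuning figure relies on reduced precision or parameter-efficient methods.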

“In 2016, we built DGX-1 to give AI researchers their own supercomputer. I hand-delivered the first system to Elon at a small startup called OpenAI — and from it came ChatGPT, kickstarting the AI revolution,” said Jensen Huang, founder and CEO of NVIDIA. “DGX-1 launched the era of AI supercomputers and unlocked the scaling laws that drive modern AI. With DGX Spark, we return to that mission — placing an AI computer in the hands of every developer to ignite the next wave of breakthroughs.”

DGX Spark brings together the full NVIDIA AI platform — including GPUs, CPUs, networking, CUDA® libraries and the NVIDIA AI software stack — into a system small enough for a lab or an office, yet powerful enough to accelerate agentic and physical AI development. By combining breakthrough performance with the reach of the NVIDIA ecosystem, DGX Spark transforms the desktop into an AI development platform.

DGX Spark systems deliver up to 1 petaflop of AI performance. They are accelerated by an NVIDIA GB10 Grace Blackwell Superchip, NVIDIA ConnectX®-7 200 Gb/s networking and NVIDIA NVLink™-C2C technology, which provides 5x the bandwidth of fifth-generation PCIe, with 128GB of CPU-GPU coherent memory.

To celebrate DGX Spark shipping worldwide, Huang hand-delivered one of the first units of DGX Spark to Elon Musk, chief engineer at SpaceX, today in Starbase, Texas. The exchange was a connection to the supercomputer’s origins, as Musk was among the team that received the first NVIDIA DGX™-1 supercomputer from Huang in 2016.

- 2025-10 NVIDIA Non-Deal Roadshow

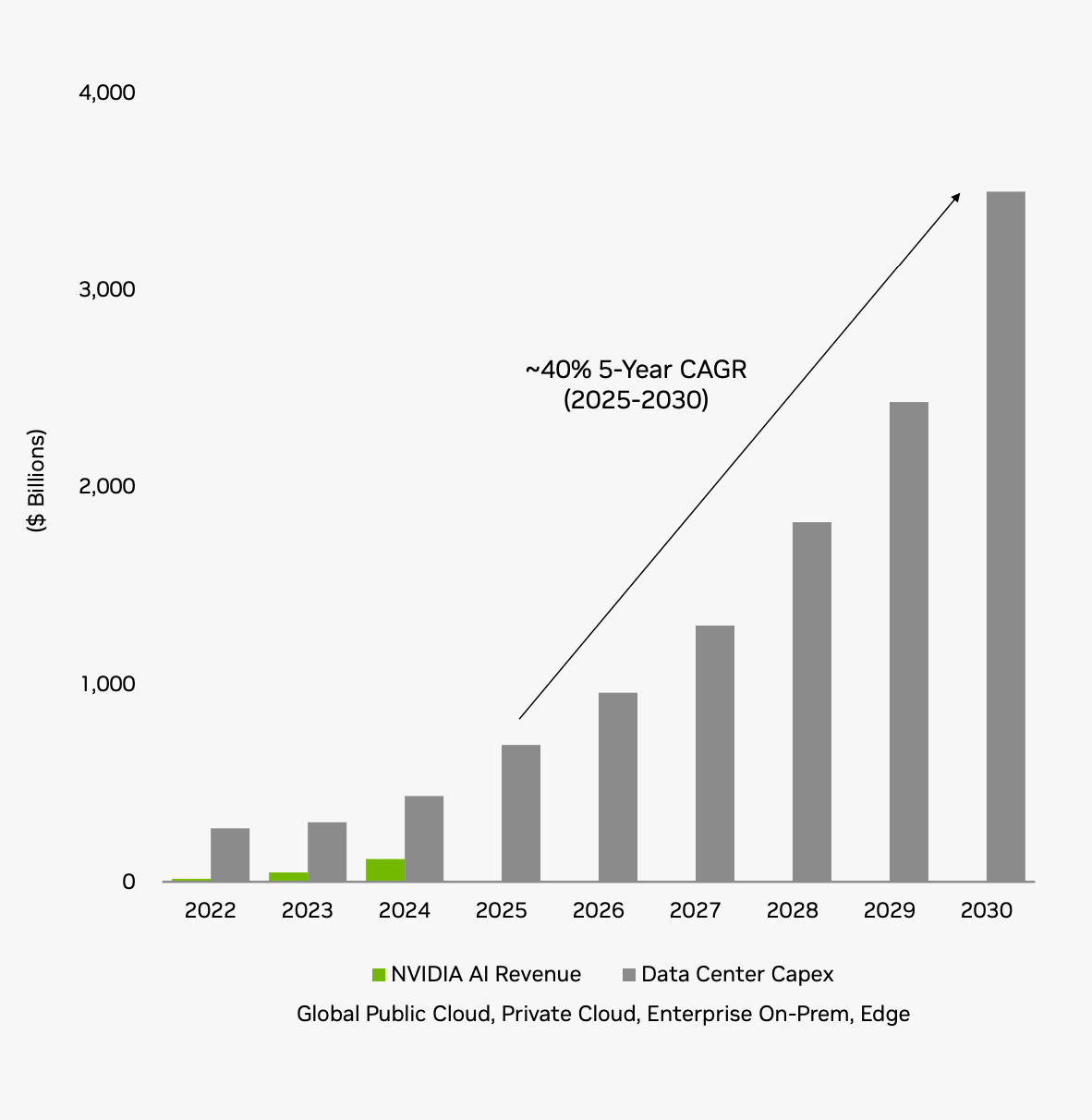

$3-4 Trillion AI Infrastructure Spend by 2030

Key TAM Growth Drivers

- End of Moore’s Law drives fundamental shift from general-purpose to accelerated computing

- Hyperscale shift to Generative AI

- Model Makers — A new industry

- Enterprise — Agentic AI enters the labor market

- Robotics, AV, Factories, and Edge powered by Physical AI

Token Generation is Doubling Every Two Months

- ChatGPT is at ~700M WAUs, with usage up ~4X y/y

- OpenAI now counts 5M paying business users, up from 3M in June

- Microsoft processed over 500 trillion tokens through its Foundry APIs in FY2025, up 7X y/y

- Alphabet processed over 980 trillion tokens in the month of June across its AI services, up from a monthly run-rate of 480 trillion tokens in May

- The Gemini app had more than 450M MAUs as of the end of July with daily requests up >50% in Q2 vs. Q1
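The growth claims above use different windows (one month, one year, a two-month doubling), which makes them hard to compare directly. Converting each to a doubling time puts them on one scale. This minimal sketch uses hypothetical helper names. It assumes smooth exponential growth between the two data points, which short windows may not reflect.

```python
import math


def annual_growth_factor(doubling_months: float) -> float:
    """Growth over 12 months if volume doubles every `doubling_months`."""
    return 2 ** (12 / doubling_months)


def implied_doubling_months(start: float, end: float, months: float) -> float:
    """Doubling time implied by growing from `start` to `end` over `months`,
    assuming smooth exponential growth in between."""
    return months * math.log(2) / math.log(end / start)


if __name__ == "__main__":
    print(annual_growth_factor(2))                         # 64.0
    print(round(implied_doubling_months(480, 980, 1), 2))  # Alphabet, May -> June: 0.97
    print(round(implied_doubling_months(1, 7, 12), 2))     # Microsoft Foundry, 7x y/y: 4.27
```

On these figures, Alphabet's May-to-June jump implies a doubling time of about one month. Microsoft's 7x annual growth implies roughly 4.3 months. The two-month headline therefore sits between individual data points rather than describing each of them.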

NVIDIA and OpenAI Partnership

Multi-Year, Multi-Generational Build Out of at Least 10 Gigawatts

- The OpenAI partnership is a powerful demonstration of NVIDIA’s ability as an AI infrastructure partner – delivering architecture, chips, systems, networking, data centers, software, operations, and financing as one integrated solution.

- The partnership extends existing collaboration to scale multiple gigawatts of infrastructure at Microsoft, OCI, and CoreWeave.

- In addition to CSP capacity, OpenAI and NVIDIA will build at least 10 gigawatts (millions of GPUs) of infrastructure to be operated by OpenAI; the first gigawatt launches in 2026 on the Vera Rubin platform

- For the first time, OpenAI will buy directly from NVIDIA, securing multi-cycle supply and forging close engineering collaboration to build AI factories

- NVIDIA intends to invest in increments over time up to $100 billion in equity; every 1 GW build-out will require $50-60 billion in total spend. OpenAI will need to reinvest future revenue and/or secure other sources of financing to cover the total cost of its build-outs
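The bullets above imply the scale of the financing gap. The calculation assumes exactly 10 GW (the text says "at least") and a cost per gigawatt within the stated $50-60 billion band. The CSP capacity mentioned above is excluded, and the helper name is hypothetical.

```python
def buildout_cost_range_busd(gigawatts: float,
                             low_busd_per_gw: float,
                             high_busd_per_gw: float) -> tuple:
    """Total build-out spend range in billions of USD."""
    return gigawatts * low_busd_per_gw, gigawatts * high_busd_per_gw


NVIDIA_EQUITY_BUSD = 100  # "up to $100 billion", from the text

if __name__ == "__main__":
    low, high = buildout_cost_range_busd(10, 50, 60)  # ASSUMPTION: exactly 10 GW
    print(f"total spend: ${low:.0f}-{high:.0f}B")
    print(f"Nvidia equity covers {NVIDIA_EQUITY_BUSD / high:.0%}-{NVIDIA_EQUITY_BUSD / low:.0%}")
    print(f"left to finance: ${low - NVIDIA_EQUITY_BUSD:.0f}-{high - NVIDIA_EQUITY_BUSD:.0f}B")
```

Even at the full $100 billion, Nvidia's equity would cover roughly a sixth to a fifth of the total. This is consistent with the point that OpenAI must find other financing.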

Huang could finance a few startups or lean on his favorite tool, CoreWeave, to fill in whatever was missing, especially during transition periods between GPU generations.

Companies are running out of funds. Big tech capex is already starting to worry investors, and what remains is increasingly diverted into data-center infrastructure like energy, construction, or building in-house chips. ROI so far has been nonexistent. Startups, meanwhile, are forced to raise fresh capital every few months.

Even the largest labs, like xAI, are raising equity and debt more frequently. Meta itself has levered up heavily, and its cash levels now resemble those of its difficult 2022.

Big Buybacks – Nvidia announced the largest buyback in its history: $55 billion.

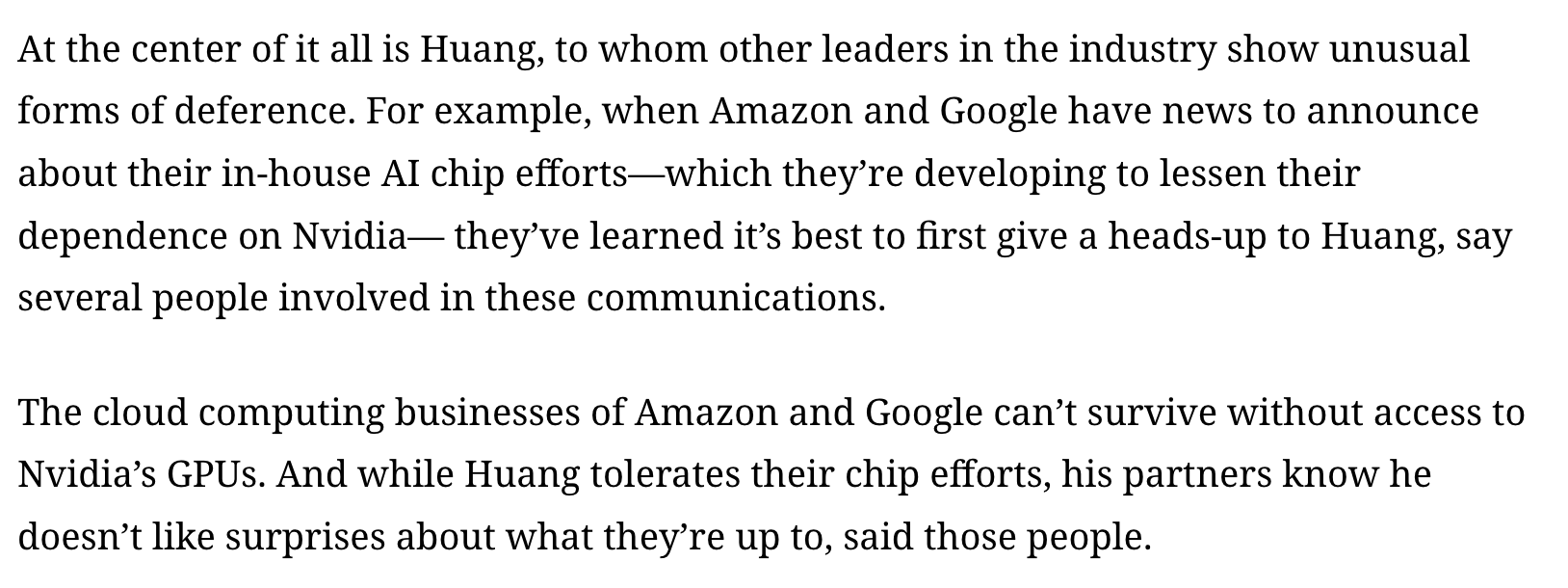

Antitrust-Style Pressure – Huang has stepped up threats and monitoring of clients. Want chips? Want priority? Fine, but on his conditions. Tell me what you’re developing. Order a fixed amount of chips. Don’t use AMD, and so on.

- 2025-09-28 Jensen running the whole game like a gangsta

- 2025-09-26 NVIDIA: OpenAI, Future of Compute, and the American Dream | BG2 w/ Bill Gurley and Brad Gerstner

- 2025-09-23 Sam Altman - Abundant Intelligence

If AI stays on the trajectory that we think it will, then amazing things will be possible. Maybe with 10 gigawatts of compute, AI can figure out how to cure cancer. Or with 10 gigawatts of compute, AI can figure out how to provide customized tutoring to every student on earth. If we are limited by compute, we’ll have to choose which one to prioritize; no one wants to make that choice, so let’s go build.

Our vision is simple: we want to create a factory that can produce a gigawatt of new AI infrastructure every week.

Over the next couple of months, we’ll be talking about some of our plans and the partners we are working with to make this a reality. Later this year, we’ll talk about how we are financing it; given how increasing compute is the literal key to increasing revenue, we have some interesting new ideas.

- 2025-09-22 OpenAI and NVIDIA Announce Strategic Partnership to Deploy 10 Gigawatts of NVIDIA Systems

OpenAI and NVIDIA today announced a letter of intent for a landmark strategic partnership to deploy at least 10 gigawatts of NVIDIA systems for OpenAI’s next-generation AI infrastructure to train and run its next generation of models on the path to deploying superintelligence. To support this deployment including data center and power capacity, NVIDIA intends to invest up to $100 billion in OpenAI as the new NVIDIA systems are deployed. The first phase is targeted to come online in the second half of 2026 using the NVIDIA Vera Rubin platform.

This partnership complements the deep work OpenAI and NVIDIA are already doing with a broad network of collaborators, including Microsoft, Oracle, SoftBank and Stargate partners, focused on building the world’s most advanced AI infrastructure.

OpenAI has grown to over 700 million weekly active users and strong adoption across global enterprises, small businesses and developers. This partnership will help OpenAI advance its mission to build artificial general intelligence that benefits all of humanity.

- 2025-09-18 Nvidia just spent over $900 million to hire Enfabrica CEO, license AI startup’s technology

Nvidia has just shelled out over $900 million to hire Enfabrica CEO Rochan Sankar and other employees at the artificial intelligence hardware startup, and to license the company’s technology, CNBC has learned.

Enfabrica, founded in 2019, says its technology can connect more than 100,000 GPUs together. It’s a solution that could help Nvidia offer integrated systems around its chips so clusters can effectively serve as a single computer.

While Nvidia’s earlier AI chips like the A100 were single processors slotted into servers, its most recent products come in tall racks with 72 GPUs installed working together.

Nvidia previously invested in Enfabrica as part of a $125 million Series B round in 2023 that was led by Atreides Management.

Late last year, Enfabrica raised another $115 million from investors including Spark Capital, Arm, Samsung and Cisco. According to PitchBook, the post-money valuation was about $600 million.

NVIDIA and Intel will focus on seamlessly connecting their architectures using NVIDIA NVLink. The aim is to integrate the strengths of NVIDIA’s AI and accelerated computing with Intel’s leading CPU technologies and x86 ecosystem to deliver cutting-edge solutions for customers.

For data centers, Intel will build NVIDIA-custom x86 CPUs that NVIDIA will integrate into its AI infrastructure platforms and offer to the market.

For personal computing, Intel will build and offer to the market x86 systems-on-chips (SoCs) that integrate NVIDIA RTX GPU chiplets. These new x86 RTX SoCs will power a wide range of PCs that demand integration of world-class CPUs and GPUs.

NVIDIA will invest $5 billion in Intel’s common stock at a purchase price of $23.28 per share. The investment is subject to customary closing conditions, including required regulatory approvals.

- 2025-09-10 Nvidia cloud credit commitments

From my tracking over the past two years, Nvidia has been accumulating cloud credit deals from its clients since 2022. Before Lambda, CoreWeave’s new order, and Oracle’s deal, Nvidia was already sitting on more than $12B in cloud credit commitments across its clients. This suggests that, if fully reported, Nvidia’s total cloud credit commitments could reach around $20B as of Q3 across all clients.

The entire industry, led by Nvidia, is pushing hard on cloud credits to keep the party going and to create artificial demand through barter transactions.

Nvidia has recently made numerous commitments:

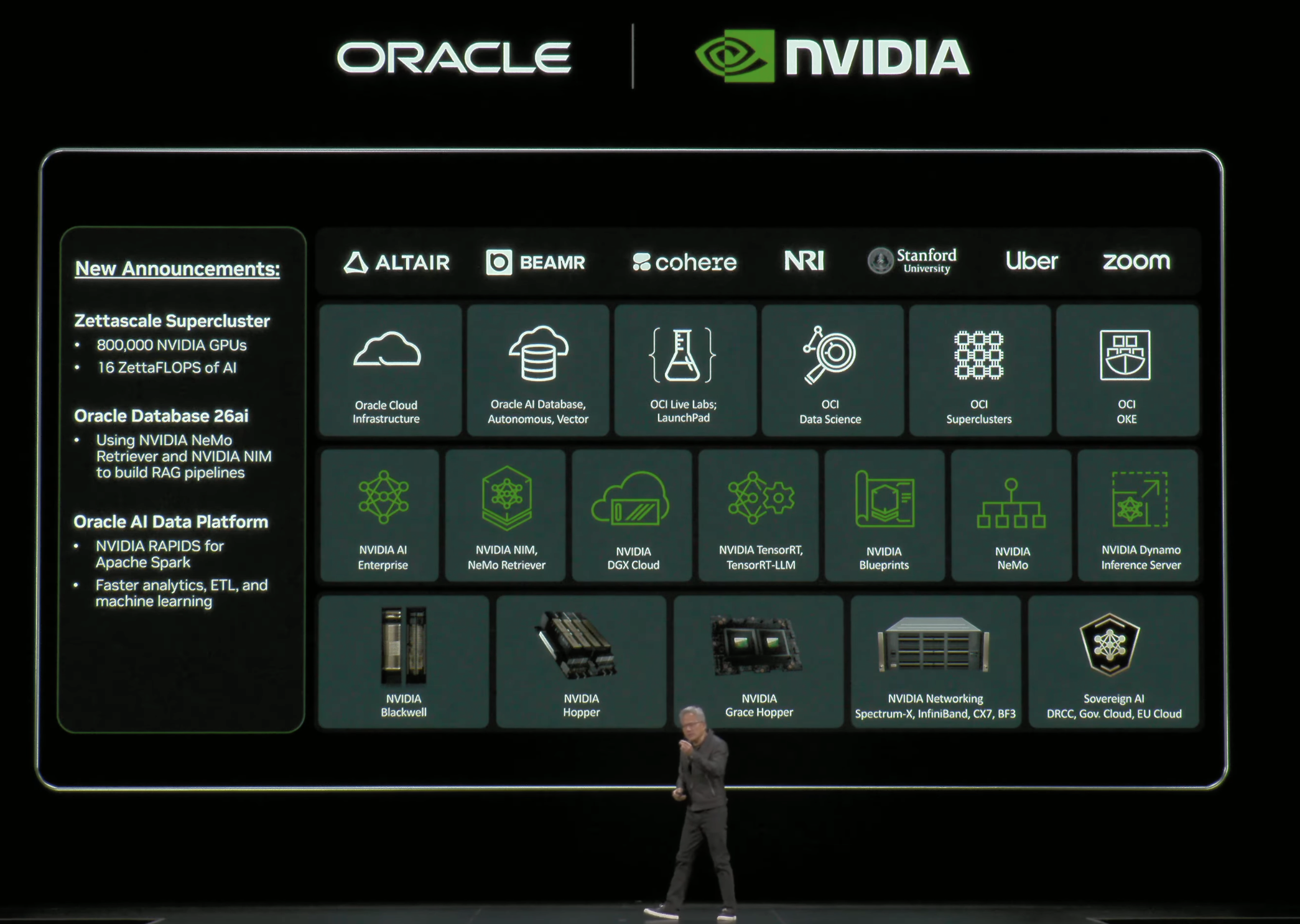

- Oracle Cloud credit deal

- CoreWeave Cloud credit deal

- Lambda Cloud credit deal

- $12B worth of cloud credit commitments across clients

- Purchase of $5B in Intel shares and a commitment to buying x86 products

- $50B buyback program

- A pledge to invest up to $500B in the U.S. over the next four years

- TDP = Thermal Design Power

- CoWoS-L (Chip on Wafer on Substrate - Local Silicon Interconnect, a variant of TSMC's advanced 2.5D packaging technology)

- FC-BGA (Flip Chip - Ball Grid Array, a conventional packaging technology)

- HGX: High-Performance Computing GPU eXchange, a high-performance computing platform

PD ratio: the ratio of prefill instances to decode instances when the two phases are served on separate (disaggregated) instances.

Serving an LLM request involves two phases: the prefill phase and the decode phase.

- In the prefill phase, the LLM processes the entire user prompt in parallel, populates the KV cache, and generates the first token. This phase determines time-to-first-token (TTFT). It is usually compute-bound and under-utilizes memory bandwidth.

- The decode phase then generates one new token at a time, reading the keys and values of all previous tokens from the KV cache. This phase determines time-per-output-token (TPOT). It is typically memory-bandwidth-bound and under-utilizes compute.
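A PD ratio can be roughly estimated from throughput alone. The sketch below is a simplified capacity-planning model, not a production scheduler. The per-instance throughputs (10,000 prompt tokens/s for prefill, 1,000 output tokens/s for decode) and the workloads are hypothetical. It also ignores the TTFT and TPOT latency targets that real deployments size against. It shows why long prompts push the ratio heavily toward prefill, which is the long-context case the Rubin CPX material below targets.

```python
import math
from dataclasses import dataclass


@dataclass
class Workload:
    requests_per_s: float  # arrival rate
    prompt_tokens: float   # mean input length, processed in prefill
    output_tokens: float   # mean output length, generated in decode


def pd_instance_counts(w: Workload, prefill_tok_per_s: float,
                       decode_tok_per_s: float) -> tuple[int, int]:
    """Instances each pool needs to keep up with the offered token load.

    ASSUMPTION: fixed per-instance throughput, no latency targets; real
    TTFT/TPOT targets would push both counts higher.
    """
    prefill = math.ceil(w.requests_per_s * w.prompt_tokens / prefill_tok_per_s)
    decode = math.ceil(w.requests_per_s * w.output_tokens / decode_tok_per_s)
    return prefill, decode


if __name__ == "__main__":
    chat = Workload(requests_per_s=10, prompt_tokens=2_000, output_tokens=500)
    long_ctx = Workload(requests_per_s=10, prompt_tokens=100_000, output_tokens=500)
    for name, w in [("chat", chat), ("long context", long_ctx)]:
        p, d = pd_instance_counts(w, prefill_tok_per_s=10_000, decode_tok_per_s=1_000)
        print(f"{name}: {p} prefill : {d} decode")
```

In practice, prefill throughput per instance also falls as prompts grow, because attention cost rises with context length. The long-context prefill count here is therefore, if anything, an underestimate.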

The key difference between AMD and the aforementioned providers is that AMD lacks robust internal workloads. Such workloads would provide a revenue and demand backstop for yet another chip-development project undertaken just to keep up.

If the MI400’s FP4 effective dense FLOPS turns out to be on par with or lower than the VR200 NVL144, AMD will effectively show up later than Nvidia to market with a carbon copy of the VR200 NVL144. Meanwhile, Nvidia will yet again pull ahead as the VR200 CPX NVL144 delivers better performance per TCO for long context lengths. AMD will then have to wait again until 2027 to catch up.

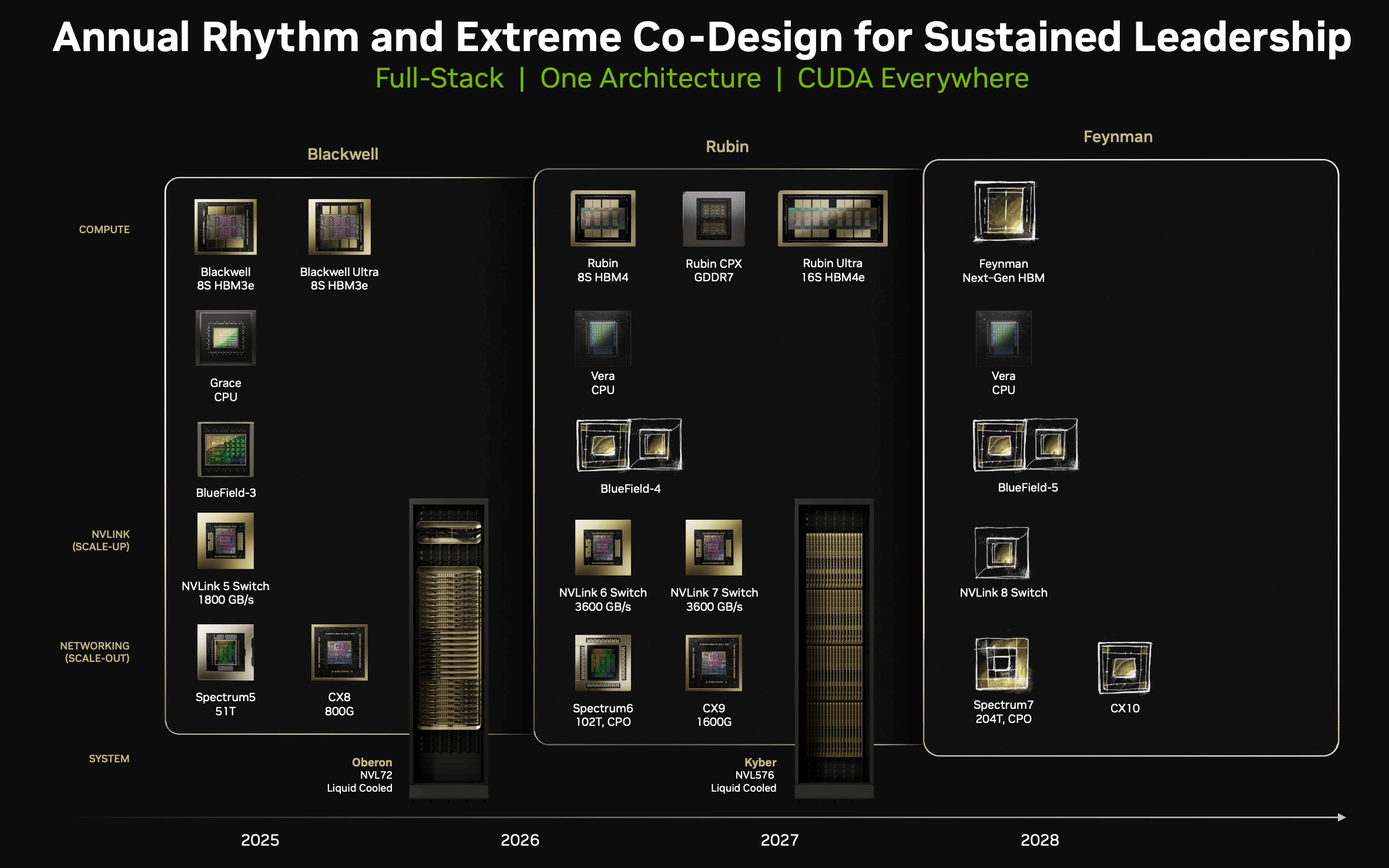

The NVIDIA Rubin CPX GPU is purpose-built to handle million-token coding and generative video applications.

The NVIDIA Vera Rubin NVL144 CPX platform packs 8 exaflops of AI performance and 100TB of fast memory in a single rack.

AI innovators like Cursor, Runway and Magic are exploring how Rubin CPX can accelerate their applications.

NVIDIA Rubin CPX enables the highest performance and token revenue for long-context processing — far beyond what today’s systems were designed to handle. This transforms AI coding assistants from simple code-generation tools into sophisticated systems that can comprehend and optimize large-scale software projects.

To process video, AI models can take up to 1 million tokens for an hour of content, pushing the limits of traditional GPU compute. Rubin CPX integrates video decoders and encoders, as well as long-context inference processing, in a single chip for unprecedented capabilities in long-format applications such as video search and high-quality generative video.

Rubin CPX delivers up to 30 petaflops of compute with NVFP4 precision for the highest performance and accuracy. It features 128GB of cost-efficient GDDR7 memory to accelerate the most demanding context-based workloads. In addition, it delivers 3x faster attention capabilities compared with NVIDIA GB300 NVL72 systems — boosting an AI model’s ability to process longer context sequences without a drop in speed.

Vera Rubin NVL144 CPX enables companies to monetize at an unprecedented scale, with $5 billion in token revenue for every $100 million invested.

NVIDIA Rubin CPX will be supported by the complete NVIDIA AI stack — from accelerated infrastructure to enterprise‑ready software.

NVIDIA Rubin CPX is expected to be available at the end of 2026.

Nvidia will remain the leader. Yet the 92% market share it commands today will erode over the next few years, because inference is an easier market in which a few select, strong competitors can rival Nvidia.

However, this part is important: Nvidia does not need a 92% near-monopoly on AI accelerators to extend its stock gains. The company has an outsized opportunity in AI software, including autonomous vehicles.

On April 9, 2025, the U.S. government, or USG, informed NVIDIA Corporation, or the Company, that the USG requires a license for export to China (including Hong Kong and Macau) and D:5 countries, or to companies headquartered or with an ultimate parent therein, of the Company’s H20 integrated circuits and any other circuits achieving the H20’s memory bandwidth, interconnect bandwidth, or combination thereof. The USG indicated that the license requirement addresses the risk that the covered products may be used in, or diverted to, a supercomputer in China. On April 14, 2025, the USG informed the Company that the license requirement will be in effect for the indefinite future.

The Company’s first quarter of fiscal year 2026 ends on April 27, 2025. First quarter results are expected to include up to approximately $5.5 billion of charges associated with H20 products for inventory, purchase commitments, and related reserves.

Country Group D:5: Countries subject to U.S. arms embargoes are identified by the State Department through notices published in the Federal Register.

NVIDIA Blackwell chips have started production at TSMC’s chip plants in Phoenix, Arizona. NVIDIA is building supercomputer manufacturing plants in Texas, with Foxconn in Houston and with Wistron in Dallas. Mass production at both plants is expected to ramp up in the next 12-15 months.

The AI chip and supercomputer supply chain is complex and demands the most advanced manufacturing, packaging, assembly and test technologies. NVIDIA is partnering with Amkor and SPIL for packaging and testing operations in Arizona.

Within the next four years, NVIDIA plans to produce up to half a trillion dollars of AI infrastructure in the United States through partnerships with TSMC, Foxconn, Wistron, Amkor and SPIL.

- 2025-03-26 The GPU Cloud ClusterMAX™ Rating System

- 2025-03-10 The Current State of AI Markets

- 2025-02-23 Nvidia Suppliers Send Mixed Signals for Delays on GB200 Systems – What It Means for NVDA Stock

Ultimately, my firm trimmed our Nvidia position (to a 10% allocation) and will happily buy lower should the assumptions in this analysis materialize.

- 2025-02-13 Datacenter Anatomy Part 2 – Cooling Systems

- 2025-01-02 Where I Plan To Buy Nvidia Stock Next

Nvidia is in a much more complex 4th wave. If this is playing out, NVDA would see the $116 level break, which opens the door to a potential low at $101, $90, or $78.

- 2024-10-14 Datacenter Anatomy Part 1: Electrical Systems

- 2025-01-31 DeepSeek Creates Buying Opportunity for Nvidia Stock

- 2025-01-25 The Short Case for Nvidia Stock

- 2025-01-12 Interview with DeepSeek's founder: China's AI cannot follow forever; someone has to stand at the technological frontier

- 2024-12-09 A Deep Dive on the Power Law

- 2024-10-20 AI Data Centers, Part 2: Energy

- 2024-08-30 Here's A Reality Check On AMD Vs. Nvidia That May Surprise You (AMD Upgrade)

- 2024-08-23 Nvidia: Buy Any Dip Before The Earnings Report

- 2024-07-12 Downgrade Alert: AMD's Uphill Battle Against Nvidia Is Getting Much Steeper

- 2024-07-08 Nvidia: Upside Potential Is Greater Than You May Think

- 2023-05-24 The Madness of High-Flyer: A Hidden AI Giant's Road to Large Models

- Sam Altman Blog

- 2019-03-11 NVIDIA to Acquire Mellanox for $6.9 Billion

NVIDIA and Mellanox today announced that the companies have reached a definitive agreement under which NVIDIA will acquire Mellanox. Pursuant to the agreement, NVIDIA will acquire all of the issued and outstanding common shares of Mellanox for $125 per share in cash, representing a total enterprise value of approximately $6.9 billion.

Jensen Huang

NVIDIA is the largest install base of video game architecture in the world. GeForce is some 300 million gamers in the world, still growing incredibly well, super vibrant.

We created a library called cuDNN. cuDNN is the world's first neural network computing library. And so we have cuDNN, we have cuOpt for combinatorial optimization, we have cuQuantum for quantum simulation and emulation, all kinds of different libraries, cuDF for data frame processing ...

And so we just did it one domain after another domain after another domain. We have a rich library for self-driving cars. We have a fantastic library for robotics, incredible library for virtual screening, whether it's physics based virtual screening or neural network based virtual screening, incredible library for climate tech. And so one domain after another domain. And so we have to go meet friends and create the market.

There are two things that are happening at the same time, and it gets conflated, and it's helpful to tease apart. So the first thing, let's start with a condition where there's no AI at all. Well, in a world where there's no AI at all, general purpose computing has run out of steam still.

...

And so the first thing that's going to happen is the world's trillion dollars of general purpose data centers are going to get modernized into accelerated computing. That's going to happen no matter what. And the reason for that is, as I described, Moore's Law is over.

...

And now, what's amazing is, so the first trillion dollars of data centers is going to get accelerated and invented this new type of software called Generative AI. This Generative AI is not just a tool, it is a skill. And so this is the interesting thing. This is why a new industry has been created.

...

For the very first time, we're going to create skills that augment people. And so that's why people think that AI is going to expand beyond the trillion dollars of data centers and IT, and into the world of skills. So what's a skill? A digital chauffeur is a skill, autonomous, a digital assembly line worker, robot, a digital customer service, chatbot, digital employee for planning NVIDIA's supply chain ...

There's not one software engineer in our company today who doesn't use code generators either the ones that we built ourselves for CUDA or USD, which is another language that we use in the company, or Verilog, or C and C++ and code generation. And so I think the days of every line of code being written by software engineers, those are completely over. And the idea that every one of our software engineers would essentially have companion digital engineers working with them 24/7, that's the future. And so the way I look at NVIDIA, we have 32,000 employees. Those 32,000 employees are surrounded by hopefully 100x more digital engineers.

And so the amazing thing is, when you want to build this AI computer, people say words like super-cluster, infrastructure, supercomputer, for good reason because it's not a chip, it's not a computer per se. And so we're building entire data centers. By building the entire data center, if you just ever look at one of these superclusters, imagine the software that has to go into it to run it. There is no Microsoft Windows for it. Those days are over. So all the software that's inside that computer is completely bespoke. Somebody has to go write that. So the person who designs the chip and the company that designs that supercomputer, that supercluster and all the software that goes into it, it makes sense that it's the same company because it will be more optimized, they'll be more performant, more energy efficient, more cost effective. And so that's the first thing.

The second thing is, AI is about algorithms. And we're really, really good at understanding what is the algorithm, what's the implication to the computing stack underneath and how do I distribute this computation across millions of processors, run it for days, with the computer being as resilient as possible, achieving great energy efficiency, getting the job done as fast as possible, so on and so forth. And so we're really, really good at that.

And then lastly, in the end, AI is computing. AI is software running on computers. And we know that the most important thing for computers is install base, having the same architecture across every cloud across on-prem to cloud, and having the same architecture available, whether you're building it in the cloud, in your own supercomputer, or trying to run it in your car or some robot or some PC, having that same identical architecture that runs all the same software is a big deal. It's called install base. And so the discipline that we've had for the last 30 years has really led to today. And it's the reason why the most obvious architecture to use if you were to start a company is to use NVIDIA's architecture. Because we're in every cloud, we're anywhere you like to buy it. And whatever computer you pick up, so long as it says NVIDIA inside, you know you can take the software and run it.

GTC (GPU Technology Conference)

GTC 2025

GTC is coming back to San Jose on March 17–21, 2025.

Nvidia Quarterly Earnings

Q3 FY2026

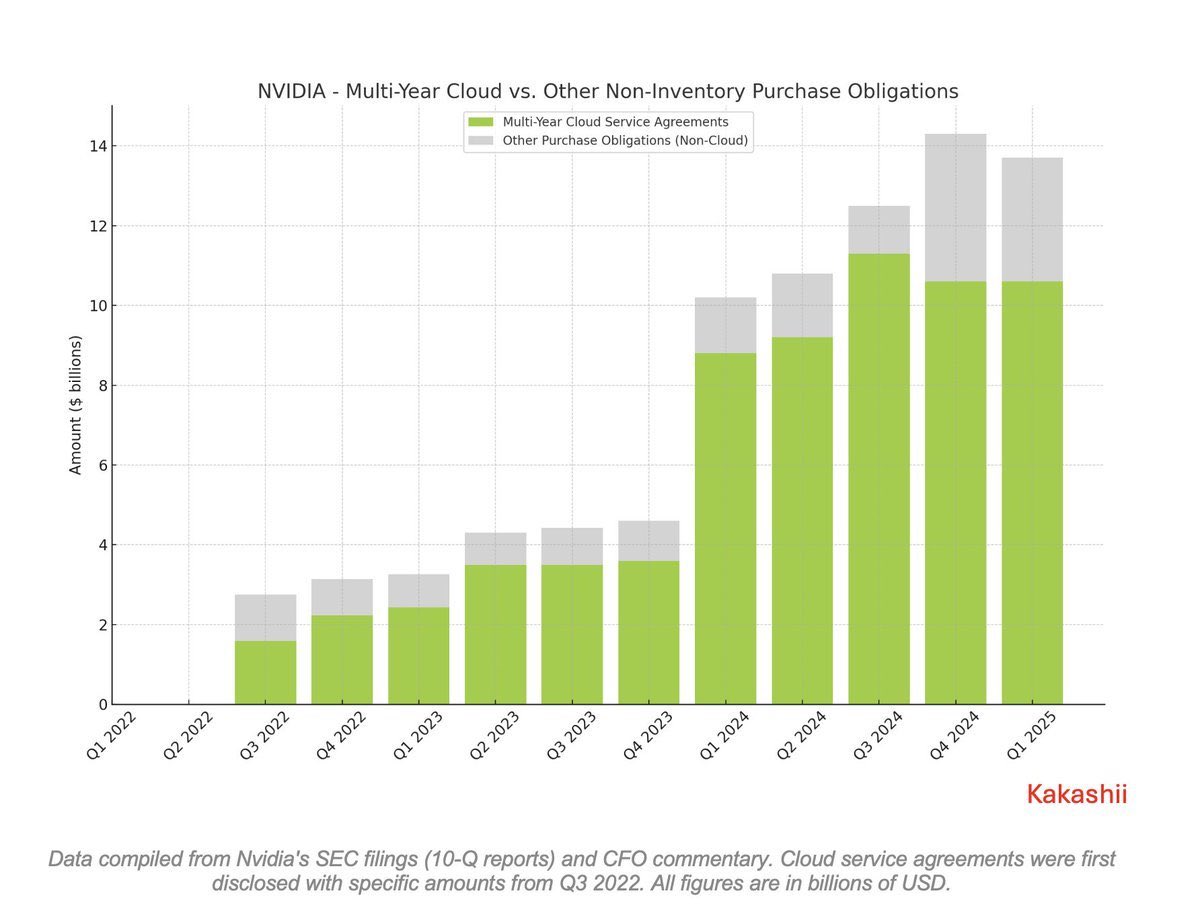

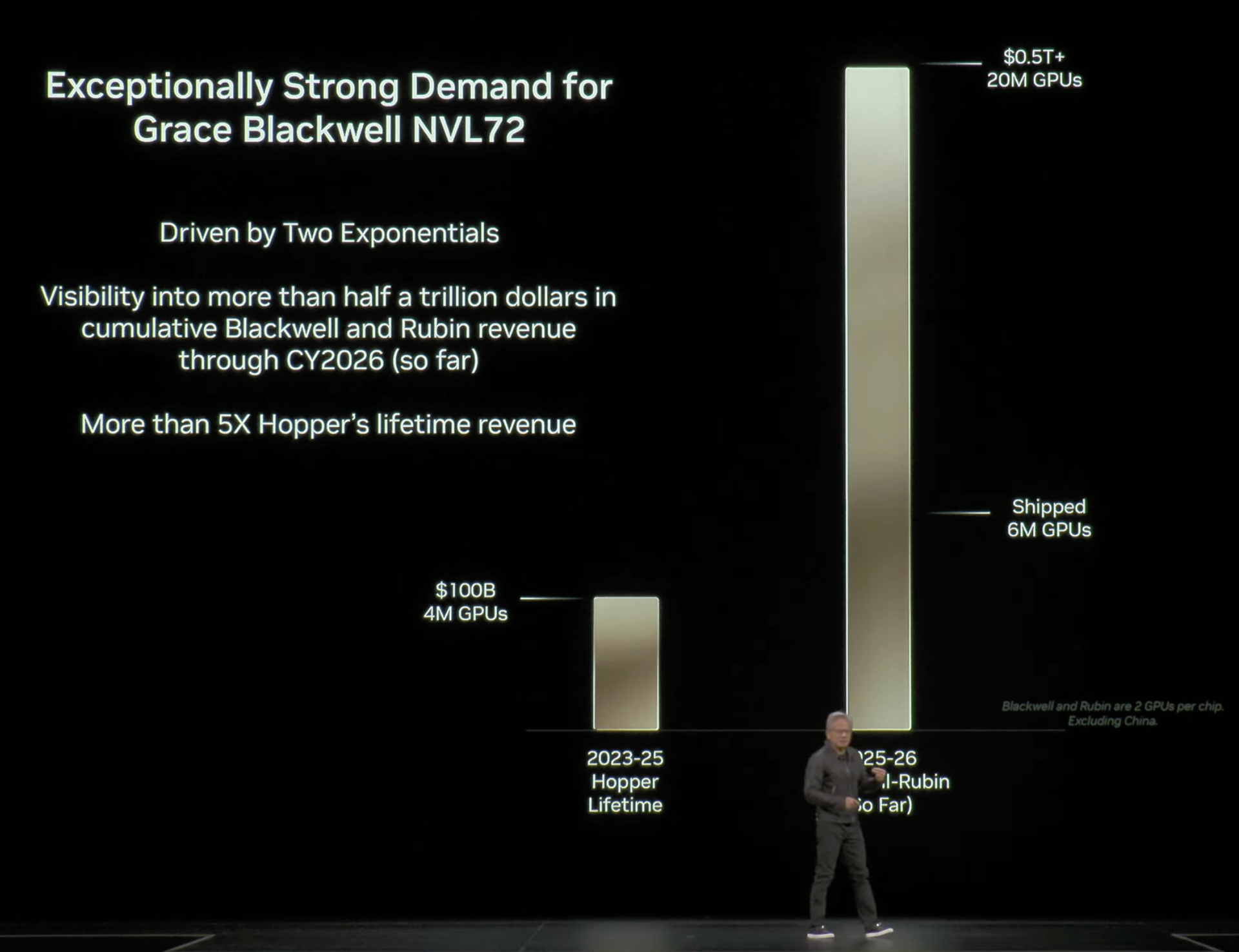

“Blackwell sales are off the charts, and cloud GPUs are sold out,” said Jensen Huang, founder and CEO of NVIDIA. “Compute demand keeps accelerating and compounding across training and inference — each growing exponentially. We’ve entered the virtuous cycle of AI. The AI ecosystem is scaling fast — with more new foundation model makers, more AI startups, across more industries, and in more countries. AI is going everywhere, doing everything, all at once.”

Data Center revenue for the third quarter was a record $51.2 billion, up 66% from a year ago and up 25% sequentially, driven by three platform shifts - accelerated computing, powerful AI models, and agentic applications.
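Because the release gives both growth rates, the prior-period figures can be backed out by dividing by one plus the growth rate. The helper below is a hypothetical illustration. The published percentages are rounded, so the results are approximations rather than the reported prior-period numbers.

```python
def prior_period(current: float, growth: float) -> float:
    """Back out a prior-period value from the current value and its growth rate."""
    return current / (1 + growth)


if __name__ == "__main__":
    dc_q3 = 51.2  # $B, Q3 FY2026 Data Center revenue from the text
    print(f"year-ago quarter: ~${prior_period(dc_q3, 0.66):.1f}B")  # up 66% y/y
    print(f"prior quarter:    ~${prior_period(dc_q3, 0.25):.1f}B")  # up 25% q/q
```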

Inventory was $19.8 billion, up from $15.0 billion sequentially, and total supply-related commitments were $50.3 billion. We are ordering to secure long lead-time components, meet the demand for Blackwell, and support future architecture ramps.

Multi-year cloud service agreements were $26.0 billion, up from $12.6 billion sequentially, to support our research and development efforts and DGX™ Cloud offerings.

In the third quarter of fiscal year 2026, we entered into an agreement to guarantee a partner's facility lease obligations in the event of their default. The agreement allows our partner to secure a limited-availability facility lease backed by our credit profile, in exchange for issuing us warrants. The maximum gross exposure is $860 million, which is reduced as the partner makes payments to the lessor over five years. The partner has placed $470 million in escrow and executed an agreement to sell the data center cloud capacity, mitigating our default risk. If the escrow and cloud capacity agreement are not sufficient to cover an event of default, we have the option to assume the lease for internal use or sublease. The guarantee, classified as a credit derivative with changes in fair value recognized in Other income and expense, has an insignificant fair value.

By executing our annual product cadence and extending our performance leadership through full-stack design, we believe NVIDIA will be the superior choice for the $3 to $4 trillion in annual AI infrastructure build-out we estimate by the end of the decade.

The clouds are sold out, and our GPU installed base, both new and previous generations, including Blackwell, Hopper, and Ampere, is fully utilized.

The world's hyperscalers, a trillion-dollar industry, are transforming search, recommendations, and content understanding from classical machine learning to generative AI.

At Meta, AI recommendation systems are delivering higher quality and more relevant content, leading to more time spent on apps such as Facebook and Threads.

Analyst expectations for the top CSPs' and hyperscalers' aggregate 2026 CapEx have continued to increase and now sit at roughly $600 billion, more than $200 billion higher than at the start of the year.

We see the transition to accelerated computing and generative AI across current hyperscale workloads contributing toward roughly half of our long-term opportunity. Another growth pillar is the ongoing increase in compute spend driven by foundation model builders such as Anthropic, Mistral, OpenAI, Reflection, Safe Superintelligence, Thinking Machines Lab, and xAI.

The three scaling laws (pretraining, post-training, and inference) remain intact.

OpenAI recently shared that their weekly user base has grown to 800 million. Enterprise customers have increased to 1 million, and their gross margins were healthy.

Anthropic recently reported that its annualized run rate revenue has reached $7 billion as of last month, up from $1 billion at the start of the year.

The world's most important enterprise software platforms, like ServiceNow, CrowdStrike, and SAP, are integrating NVIDIA's accelerated computing and AI stack.

Lilly's AI factory for drug discovery is the pharmaceutical industry's most powerful data center.

We are working on a strategic partnership with OpenAI focused on helping them build and deploy at least 10 gigawatts of AI data centers. In addition, we have the opportunity to invest in the company. We serve OpenAI through their cloud partners Microsoft Azure, OCI, and CoreWeave.

Yesterday, we celebrated an announcement with Anthropic. For the first time, Anthropic is adopting NVIDIA, and we are establishing a deep technology partnership to support Anthropic's fast growth. We will collaborate to optimize Anthropic models for CUDA and deliver the best possible performance, efficiency, and TCO. We will also optimize future NVIDIA architectures for Anthropic workloads. Anthropic's compute commitment will initially include up to one gigawatt of compute capacity with Grace Blackwell and Vera Rubin systems.

Physical AI is already a multibillion-dollar business addressing a multitrillion-dollar opportunity, and the next leg of growth for NVIDIA. Leading US manufacturers and robotics innovators are leveraging NVIDIA's three-computer architecture to train on NVIDIA, test on Omniverse computers, and deploy real-world AI on Jetson robotic computers.

The transition to accelerated computing is foundational and necessary, essential in a post-Moore's law era. The transition to generative AI is transformational and necessary, supercharging existing applications and business models. And the transition to agentic and physical AI will be revolutionary, giving rise to new applications, companies, products, and services. As you consider infrastructure investments, consider these three fundamental dynamics. Each will contribute to infrastructure growth in the coming years.

Just today, I was reading a text from Demis, and he was saying that pre-training and post-training are fully intact. You know?

Of course, rather than giving up a share of our company, we get a share of their company. We invested in one of the most consequential once-in-a-generation companies, and we have a share of it.

We run OpenAI. We run Anthropic. We run xAI, and because of our deep partnership with Elon and xAI, we were able to bring that opportunity to Saudi Arabia, to the KSA, so that HUMAIN could also host xAI. We run Gemini. We run Thinking Machines.

Q2 FY2026

There were no H20 sales to China-based customers in the second quarter. NVIDIA benefited from a $180 million release of previously reserved H20 inventory, from approximately $650 million in unrestricted H20 sales to a customer outside of China.

During the first half of fiscal 2026, NVIDIA returned $24.3 billion to shareholders in the form of shares repurchased and cash dividends. As of the end of the second quarter, the company had $14.7 billion remaining under its share repurchase authorization. On August 26, 2025, the Board of Directors approved an additional $60.0 billion to the Company’s share repurchase authorization, without expiration.

NVIDIA will pay its next quarterly cash dividend of $0.01 per share on October 2, 2025, to all shareholders of record on September 11, 2025.

- Audio Webcast

- CFO Commentary

- NVIDIA Quarterly Revenue Trend

- Form 10-Q/Form 10-K

- Earnings Call Transcript

China declined on a sequential basis to a low single-digit percentage of data center revenue. Note that our Q3 outlook does not include H20 shipments to China customers. Singapore revenue represented 22% of second-quarter billed revenue, as customers have centralized their invoicing in Singapore. Over 99% of data center compute revenue billed to Singapore was for U.S.-based customers.

Blackwell is coming to GeForce NOW in September. This is GeForce NOW's most significant upgrade, offering RTX 5080-class performance, minimal latency, and 5K resolution at 120 frames per second. We are also doubling the GeForce NOW catalog to over 4,500 titles, the largest library of any cloud gaming service.

While we prioritize funding our growth and strategic initiatives, in Q2, we returned $10 billion to shareholders through share repurchases and cash dividends. Our Board of Directors recently approved a $60 billion share repurchase authorization to add to our remaining $14.7 billion of authorization at the end of Q2.

In closing, let me highlight upcoming events for the financial community. We will be at the Goldman Sachs Technology Conference on September 8 in San Francisco. Our annual NDR will commence the first part of October. GTC DC begins on October 27, with Jensen's keynote scheduled for the 28th.

Over the next 5 years, with Blackwell, Rubin, and follow-ons, we're going to scale into what is effectively a $3 trillion to $4 trillion AI infrastructure opportunity. Over the last couple of years, CapEx in just the top 4 CSPs has doubled and grown to about $600 billion. So we're at the beginning of this build-out, and advances in AI technology have really enabled AI to be adopted and to solve problems across many different industries.

Several of your large customers already have or are planning many ASIC projects. I think one of your ASIC competitors, Broadcom, signaled that they could grow their AI business by almost 55% to 60% next year.

The China market, I've estimated to be about $50 billion of opportunity for us this year if we were able to address it with competitive products. And if it's $50 billion this year, you would expect it to grow, say, 50% per year.

It is the second-largest computing market in the world, and it is also home to AI researchers: about 50% of the world's AI researchers are in China. The vast majority of the leading open-source models are created in China.
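To make the "grow, say, 50% per year" remark concrete, the sketch below compounds the $50 billion estimate. It is illustrative only. The 50% rate is the rough figure from the quote, the three-year horizon is an arbitrary choice, and nothing here is a company forecast. At that rate the opportunity would more than double within two years (1.5² = 2.25).

```python
def project(base: float, annual_growth: float, years: int) -> list[float]:
    """Compound `base` at `annual_growth` (0.5 means 50%) for years 0..years."""
    return [base * (1 + annual_growth) ** t for t in range(years + 1)]


# Illustrative only: the $50B starting point and "say, 50% per year" are the
# rough figures from the quote; the 3-year horizon is an arbitrary choice.
for year, value in enumerate(project(50.0, 0.50, 3)):
    print(f"Year {year}: ${value:.1f}B")
```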

We now offer 3 networking technologies. One is for scale up. One is for scale out and one for scale across.

Scale up is so that we could build the largest possible virtual GPU, the virtual compute node. NVLink is revolutionary. NVLink 72 is what made it possible for Blackwell to deliver such an extraordinary generational jump over Hopper's NVLink 8. At a time when we have long thinking models, agentic AI reasoning systems, the NVLink basically amplifies the memory bandwidth, which is really critical for reasoning systems. And so NVLink 72 is fantastic.

We then scale out with networking, for which we have two options. We have InfiniBand, which is unquestionably the lowest-latency, lowest-jitter, best scale-out network. It does require more expertise in managing those networks. And for supercomputing and for the leading model makers, InfiniBand, Quantum InfiniBand, is the unambiguous choice. If you were to benchmark an AI factory, the ones with InfiniBand deliver the best performance.

For those who would like to use Ethernet because their whole data center is built with Ethernet, we have a new type of Ethernet called Spectrum Ethernet. Spectrum Ethernet is not off the shelf. It has a whole bunch of new technologies designed for low latency, low jitter, and congestion control, and it comes closer, much, much closer, to InfiniBand than anything else that's out there. We call that Spectrum-X Ethernet.

And then finally, we have Spectrum-XGS, giga-scale networking for connecting multiple data centers, multiple AI factories, into a super factory, a gigantic system. Networking is obviously very important in AI factories. In fact, choosing the right networking, with the throughput improvement of going from 65% to 85% or 90%, is the kind of step-up that effectively makes networking free. Choose the right networking and you'll get a return on it like you can't believe, because an AI factory of a gigawatt, as I mentioned before, could cost $50 billion. So improving the efficiency of that factory by tens of percent results in $10 billion to $20 billion worth of effective benefit. Networking is a very important part of it.

It's the reason why NVIDIA dedicates so much in networking. That's the reason why we purchased Mellanox 5.5 years ago. And Spectrum-X, as we mentioned earlier, is now quite a sizable business, and it's only about 1.5 years old. So Spectrum-X is a home run. All 3 of them are going to be fantastic. NVLink scale up, Spectrum-X and InfiniBand scale out, and then Spectrum-XGS for scale across.
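The networking argument above is stated without the arithmetic. The sketch below is one hedged way to reproduce the order of magnitude. It assumes that the "65% to 85% or 90%" figures describe the fraction of the factory's peak throughput actually delivered, which is an interpretation the quote does not spell out. It shows two readings: the extra useful capacity valued at the factory's cost, and the extra capex a less efficient factory would need to match the same output. The first gives about $10 billion to $12.5 billion and the second about $15.4 billion to $19.2 billion, both within the quoted $10 billion to $20 billion range. The function names are illustrative.

```python
FACTORY_COST = 50e9  # "a gigawatt ... could be $50 billion", per the quote


def added_useful_capacity(cost: float, util_before: float, util_after: float) -> float:
    """Reading 1: extra useful throughput, valued at the factory's cost per unit of peak capacity."""
    return cost * (util_after - util_before)


def equivalent_extra_capex(cost: float, util_before: float, util_after: float) -> float:
    """Reading 2: extra spend a factory stuck at util_before needs to match util_after's output."""
    return cost * (util_after / util_before - 1)


# Assumption: 65%, 85%, and 90% are fractions of peak throughput actually delivered.
for after in (0.85, 0.90):
    a = added_useful_capacity(FACTORY_COST, 0.65, after)
    b = equivalent_extra_capex(FACTORY_COST, 0.65, after)
    print(f"65% -> {after:.0%}: ${a / 1e9:.1f}B added capacity, ${b / 1e9:.1f}B equivalent capex")
```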

One-shot chatbots have evolved into agentic reasoning AI that researches, plans, and uses tools, driving an orders-of-magnitude jump in compute for both training and inference. Agentic AI is reaching maturity and has opened the enterprise market to build domain- and company-specific AI agents for enterprise workflows, products, and services.

Q1 FY2026

On April 9, 2025, NVIDIA was informed by the U.S. government that a license is required for exports of its H20 products into the China market. As a result of these new requirements, NVIDIA incurred a $4.5 billion charge in the first quarter of fiscal 2026 associated with H20 excess inventory and purchase obligations as the demand for H20 diminished. Sales of H20 products were $4.6 billion for the first quarter of fiscal 2026 prior to the new export licensing requirements. NVIDIA was unable to ship an additional $2.5 billion of H20 revenue in the first quarter.

For the quarter, GAAP and non-GAAP gross margins were 60.5% and 61.0%, respectively. Excluding the $4.5 billion charge, first quarter non-GAAP gross margin would have been 71.3%.
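The size of the charge can be cross-checked against the margin gap. This sketch assumes the whole $4.5 billion charge sits in cost of revenue. Under that assumption the charge lowers gross margin by exactly charge ÷ revenue, so the 10.3-point gap (71.3% minus 61.0%) implies revenue of about $43.7 billion. That is close to the $44.1 billion NVIDIA reported for the quarter, and the difference is consistent with rounding in the published percentages. The function name `implied_revenue` is illustrative.

```python
def implied_revenue(charge: float, margin_pct: float, margin_ex_charge_pct: float) -> float:
    """Back out revenue from a one-time charge and the margin with and without it.

    Assumes the entire charge is booked in cost of revenue, so it lowers
    gross margin by exactly charge / revenue.
    """
    gap = (margin_ex_charge_pct - margin_pct) / 100  # 10.3 points -> 0.103
    return charge / gap


CHARGE_B = 4.5  # $ billions, H20 excess inventory and purchase obligation charge
revenue_b = implied_revenue(CHARGE_B, margin_pct=61.0, margin_ex_charge_pct=71.3)
print(f"Implied Q1 FY2026 revenue: about ${revenue_b:.1f}B")
```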

For the quarter, GAAP and non-GAAP earnings per diluted share were $0.76 and $0.81, respectively. Excluding the $4.5 billion charge and related tax impact, first quarter non-GAAP diluted earnings per share would have been $0.96.

NVIDIA will pay its next quarterly cash dividend of $0.01 per share on July 3, 2025, to all shareholders of record on June 11, 2025.

We utilized cash of $14.3 billion towards shareholder returns, including $14.1 billion in share repurchases and $244 million in cash dividends.

Q4 FY2025

Hugging Face alone hosts over 90,000 derivatives created from the Llama foundation model. The scale of post-training and model customization is massive and can collectively demand orders of magnitude more compute than pretraining. Our inference demand is accelerating, driven by test-time scaling and new reasoning models like OpenAI's o3, DeepSeek-R1, and Grok 3. Long-thinking reasoning AI can require 100x more compute per task compared to one-shot inferences.
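A rough way to see where a figure like 100x comes from: for a dense decoder-only transformer, generating one token costs roughly 2 FLOPs per parameter, so compute per task scales with the number of tokens generated. The sketch below uses a hypothetical 70-billion-parameter model and hypothetical answer lengths: 500 tokens for a one-shot reply and 50,000 for a long reasoning trace. It ignores the attention cost over the growing context, which makes long traces even more expensive. It is an approximation, not how NVIDIA derived its number.

```python
def decode_flops(params: float, tokens: int) -> float:
    """Approximate generation compute for a dense transformer:
    about 2 FLOPs per parameter per generated token. Attention over the
    context is ignored, which understates the cost of long traces."""
    return 2 * params * tokens


PARAMS = 70e9              # hypothetical model size
ONE_SHOT_TOKENS = 500      # hypothetical short answer
REASONING_TOKENS = 50_000  # hypothetical long chain of thought

one_shot = decode_flops(PARAMS, ONE_SHOT_TOKENS)
reasoning = decode_flops(PARAMS, REASONING_TOKENS)
print(f"one-shot:  {one_shot:.1e} FLOPs")
print(f"reasoning: {reasoning:.1e} FLOPs ({reasoning / one_shot:.0f}x)")
```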

NVIDIA's automotive vertical revenue is expected to grow to approximately $5 billion this fiscal year.

France's EUR 100 billion AI investment and the EU's EUR 200 billion InvestAI initiative offer a glimpse into the build-out set to redefine global AI infrastructure in the coming years.

Now, as a percentage of total Data Center revenue, data center sales in China remained well below levels seen at the onset of export controls. Absent any change in regulations, we believe that China shipments will remain roughly at the current percentage. The market in China for data center solutions remains very competitive.

...

China is approximately the same percentage as Q4 and as previous quarters. It's about half of what it was before the export control. But it's approximately the same in percentage.

We expect networking to return to growth in Q1.

(Gaming) However, Q4 shipments were impacted by supply constraints. We expect strong sequential growth in Q1 as supply increases. The new GeForce RTX 50 Series desktop and laptop GPUs are here.

As Blackwell ramps, we expect gross margins to be in the low 70s. Initially, we are focused on expediting the manufacturing of Blackwell systems to meet strong customer demand as they race to build out Blackwell infrastructure. When fully ramped, we have many opportunities to improve the cost, and gross margin will improve and return to the mid-70s, late this fiscal year.

Jensen Huang:

And when you have a data center that allows you to configure and use it based on whether you're doing more pretraining now, post-training now, or scaling out your inference, our architecture is fungible and easy to use in all of those different ways.

Jensen Huang

We know several things, Vivek. We have a fairly good line of sight of the amount of capital investment that data centers are building out toward. We know that going forward, the vast majority of software is going to be based on machine learning. And so, accelerated computing and generative AI, reasoning AI are going to be the type of architecture you want in your data center.

We have, of course, forecasts and plans from our top partners. And we also know that there are many innovative, really exciting start-ups still coming online as new opportunities for developing the next breakthroughs in AI, whether it's agentic AI, reasoning AI, or physical AI. The start-up scene is still quite vibrant, and each one of them needs a fair amount of computing infrastructure. As for the signals, near-term signals, of course, are POs, forecasts, and things like that.

Midterm signals would be the level of infrastructure and capex scale-out compared to previous years. And then the long-term signals have to do with the fact that we know software has fundamentally changed from hand-coding that runs on CPUs to machine learning and AI-based software that runs on GPUs and accelerated computing systems. And so, we have a fairly good sense that this is the future of software. Another way to think about it is that we've really only tapped consumer AI and search, and some amount of consumer generative AI, advertising, and recommenders: kind of the early days of software.

The next wave is coming: agentic AI for enterprise, physical AI for robotics, and sovereign AI as different regions build out AI for their own ecosystems. Each one of these is barely off the ground, and we can see them. We can see them because, obviously, we're at the center of much of this development, and we can see great activity happening in all these different places, and these will happen. So, near term, midterm, long term.

Jensen Huang

As you know, with the first Blackwell we had a hiccup that probably cost us a couple of months. We're fully recovered, of course.

...

And the click after that is called Vera Rubin, and all of our partners are getting up to speed on that transition and preparing for it. And again, we're going to provide a big, huge step-up. So come to GTC, and I'll talk to you about Blackwell Ultra and Vera Rubin, and then show you what comes after that. Really exciting new products to come, so come to GTC, please.

Jensen Huang

Well, we build very different things than ASICs: in some ways completely different, and in some areas we intercept. We're different in several ways.

One, NVIDIA's architecture is general, whether you've optimized for autoregressive models, diffusion-based models, vision-based models, multimodal models, or text models. We're great at all of it because our architecture is sensible and our software stack ecosystem is so rich that we're the initial target of most exciting innovations and algorithms. And so, by definition, we're much, much more general than narrow.

We're also really good end-to-end: from data processing and the curation of the training data, to training, of course, to reinforcement learning used in post-training, all the way to inference with test-time scaling.

So, we're general, we're end-to-end, and we're everywhere. We're not in just one cloud; we're in every cloud, we could be on-prem, we could be in a robot. Our architecture is much more accessible and a great initial target for anybody starting up a new company. And so, we're everywhere.

And the third thing I would say is that our performance and our rhythm are incredibly fast. Remember that these data centers are always fixed in size or fixed in power. If our performance per watt is anywhere from 2x to 4x to 8x higher, which is not unusual, it translates directly to revenues. If you have a 100-megawatt or a gigawatt data center and the throughput in it is four times or eight times higher, your revenues for that data center are four times or eight times higher. The reason this is so different from data centers of the past is that AI factories are directly monetizable through the tokens they generate. So the token throughput of our architecture being so incredibly fast is incredibly valuable to all of the companies building these things for revenue generation and to capture a fast ROI. And so, I think the third reason is performance.
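The performance-per-watt argument reduces to one line of arithmetic. In a power-capped facility, tokens per second equals watts times tokens per joule, and tokens per joule is simply throughput per watt, so revenue scales linearly with performance per watt. The sketch below assumes hypothetical values (1 token per joule, $1 per million tokens, full utilization, every token sold). Only the ratios are meaningful, and the function name is illustrative.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def annual_token_revenue(power_watts: float, tokens_per_joule: float,
                         usd_per_million_tokens: float) -> float:
    """Annual revenue of a power-capped AI factory.

    tokens/s = watts x tokens per joule, and tokens per joule is just
    throughput per watt. Assumes full utilization and every token is sold.
    """
    tokens_per_year = power_watts * tokens_per_joule * SECONDS_PER_YEAR
    return tokens_per_year / 1e6 * usd_per_million_tokens


POWER_WATTS = 100e6    # the 100-megawatt data center from the quote
BASE_EFFICIENCY = 1.0  # hypothetical tokens per joule
PRICE = 1.0            # hypothetical $ per million tokens

baseline = annual_token_revenue(POWER_WATTS, BASE_EFFICIENCY, PRICE)
for gain in (2, 4, 8):
    # Same power budget, higher throughput per watt: revenue scales by the same factor.
    upgraded = annual_token_revenue(POWER_WATTS, BASE_EFFICIENCY * gain, PRICE)
    print(f"{gain}x perf/watt -> {upgraded / baseline:.0f}x revenue")
```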

And then the last thing that I would say is that the software stack is incredibly hard. Building an ASIC is no different from what we do: we build a new architecture. And the ecosystem that sits on top of our architecture is 10 times more complex today than it was two years ago. That's fairly obvious, because the amount of software that the world is building on top of our architecture is growing exponentially and AI is advancing very quickly. So, bringing that whole ecosystem on top of multiple chips is hard.

And so, I would say those are the four reasons. And then finally, I will say this: just because the chip is designed doesn't mean it gets deployed. You've seen this over and over again. There are a lot of chips that get built, but when the time comes, a business decision has to be made, and that business decision is about deploying a new engine, a new processor, into an AI factory that is limited in size, in power, and in fine. And our technology is not only more advanced and more performant, it has much, much better software capability and, very importantly, our ability to deploy is lightning fast.

And so, these things are not for the faint of heart, as everybody knows now. There are a lot of different reasons why we do well, why we win.

Jensen Huang

With respect to geographies, the takeaway is that AI is software. It's modern software. It's incredible modern software, but it's modern software and AI has gone mainstream.

...

And so, I'm fairly sure that we're at the beginning of this new era. And then lastly, no technology has ever had the opportunity to address a larger part of the world's GDP than AI. No software tool ever has. This is now a software tool that can address a larger part of the world's GDP than at any time in history.

And so, the way we think about growth, and the way we think about whether something is big or small has to be in the context of that. And when you take a step back and look at it from that perspective, we're really just in the beginning.

Jensen Huang

And so, the second part is how we see the growth of enterprise, or non-CSPs, if you will, going forward. The answer is, I believe, that long term it is by far larger, and the reason is that what the computer industry does not serve today is largely industrial.

So, let me give you an example. When we say enterprise, let's use the car company as an example, because they make both soft things and hard things. In the case of a car company, the employees will be what we call enterprise, and agentic AI and software planning systems and tools (we have some really exciting things to share with you guys at GTC) build agentic systems for employees, to make them more productive at designing, marketing, planning, and operating their company. That's agentic AI.

On the other hand, the cars that they manufacture also need AI. They need an AI system that trains the cars and treats this entire giant fleet of cars. Today, there are 1 billion cars on the road. Someday, every single one of those 1 billion cars will be a robotic car, and they'll all be collecting data, and we'll be improving them using an AI factory.

Whereas they have a car factory today, in the future they'll have a car factory and an AI factory. And then inside the car itself is a robotic system. So, as you can see, there are three computers involved: there's the computer that helps the people, and there's the computer that builds the AI for the machinery, which could be, of course, a tractor or a lawn mower.

It could be a humanoid robot that's being developed today. It could be a building. It could be a warehouse. These physical systems require a new type of AI we call physical AI.

They can't just understand the meaning of words and languages; they have to understand the meaning of the world: friction and inertia, object permanence, and cause and effect. All of those types of things are common sense to you and me, but AIs have to go learn those physical effects. So, we call that physical AI.

That whole part of using agentic AI to revolutionize the way we work inside companies is just starting. This is now the beginning of the agentic AI era, and you hear a lot of people talking about it, and we've got some really great things going on. And then there's physical AI after that, and then there are robotic systems after that. So these three computers are all brand new. My sense is that long term, this will be by far the largest of them all, which kind of makes sense.

The world's GDP is represented by either heavy industries or industrials and companies that are providing for those.

We delivered $11.0 billion of Blackwell architecture revenue in the fourth quarter of fiscal 2025, the fastest product ramp in our company’s history. Blackwell sales were led by large cloud service providers which represented approximately 50% of our Data Center revenue.

Gaming revenue for the fourth quarter was down 11% from a year ago and down 22% sequentially, due to limited supply for both Blackwell and Ada GPUs.

Net gains from non-marketable and publicly-held equity securities for the fourth quarter were $727 million, reflecting fair value adjustments and sales of equity investments.

Q3 FY2025

Vivek Arya:

Jensen, my main question, historically, when we have seen hardware deployment cycles, they have inevitably included some digestion along the way. When do you think we get to that phase? Or is it just too premature to discuss that because you're just at the start of Blackwell? So, how many quarters of shipments do you think is required to kind of satisfy this first wave? Can you continue to grow this into calendar '26? Just how should we be prepared to see what we have seen historically, right, a period of digestion along the way of a long-term kind of secular hardware deployment?

Jensen Huang:

The way to think through that, Vivek, is that I believe there will be no digestion until we modernize $1 trillion worth of data centers. If you just look at the world's data centers, the vast majority of them were built for a time when we wrote applications by hand and ran them on CPUs. It's just not a sensible thing to do anymore. If a company is ready to build a data center tomorrow, it ought to build it for a future of machine learning and generative AI, because it has plenty of old data centers.

And so, what's going to happen over the course of the next X number of years? Let's assume that over the course of four years, the world's data centers could be modernized as we grow into IT; as you know, IT continues to grow about 20% to 30% a year, let's say. But let's say by 2030, the world's data centers for computing are, call it, a couple of trillion dollars. We have to grow into that. We have to modernize the data center from coding to machine learning.

That's number one. The second part of it is generative AI. And we're now producing a new type of capability the world's never known, a new market segment that the world's never had. If you look at OpenAI, it didn't replace anything.

It's something that's completely brand new. In a lot of ways, it's like when the iPhone came: it was completely brand new. It wasn't really replacing anything. And so, we're going to see more and more companies like that.

And they're going to create and generate, out of their services, essentially intelligence. Some of it would be digital artist intelligence like Runway. Some of it would be basic intelligence like OpenAI. Some of it would be legal intelligence like Harvey, digital marketing intelligence like Writer, and so on and so forth.

And the number of these companies, these AI-native companies, as they're called, is just in the hundreds. In almost every platform shift, there was a new class of company: there were Internet companies, as you recall; there were cloud-first companies; there were mobile-first companies.

Now, they're AI natives. And so, these companies are being created because people see that there's a platform shift, and there's a brand-new opportunity to do something completely new. And so, my sense is that we're going to continue to build out to modernize IT, modernize computing, number one; and then number two, create these AI factories that are going to be for a new industry for the production of artificial intelligence.

Q2 FY2025

Our sovereign AI opportunities continue to expand as countries recognize AI expertise and infrastructure as national imperatives for their society and industries.

Remember that computing is going through two platform transitions at the same time, and that's really important to keep in mind: general-purpose computing is shifting to accelerated computing, and human-engineered software is transitioning to generative AI, or artificial-intelligence-learned software.

And NVIDIA's software TAM can be significant as the CUDA-compatible GPU installed base grows from millions to tens of millions. As Colette mentioned, NVIDIA software will exit the year at a $2 billion run rate.

- NVIDIA Corporation (NVDA) Q2 2025 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q2 2025 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q2 2025 Quarterly Revenue Trend

Q1 FY2025

We are fundamentally changing how computing works and what computers can do, from general purpose CPU to GPU accelerated computing, from instruction-driven software to intention-understanding models, from retrieving information to performing skills, and at the industrial level, from producing software to generating tokens, manufacturing digital intelligence.

~ Jensen Huang

- NVIDIA Corporation (NVDA) Q1 2025 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q1 2025 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q1 2025 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q1 2025 Quarterly Presentation

Q4 FY2024

- NVIDIA Corporation (NVDA) Q4 2024 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q4 2024 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q4 2024 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q4 2024 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q4 2024 Quarterly Presentation

Q3 FY2024

- NVIDIA Corporation (NVDA) Q3 2024 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q3 2024 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q3 2024 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q3 2024 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q3 2024 Quarterly Presentation

Q2 FY2024

- NVIDIA Corporation (NVDA) Q2 2024 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q2 2024 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q2 2024 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q2 2024 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q2 2024 Quarterly Presentation

Q1 FY2024

- NVIDIA Corporation (NVDA) Q1 2024 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q1 2024 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q1 2024 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q1 2024 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q1 2024 Quarterly Presentation

Q4 FY2023

- NVIDIA Corporation (NVDA) Q4 2023 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q4 2023 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q4 2023 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q4 2023 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q4 2023 Quarterly Presentation

Q3 FY2023

- NVIDIA Corporation (NVDA) Q3 2023 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q3 2023 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q3 2023 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q3 2023 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q3 2023 Quarterly Presentation

Q2 FY2023

- NVIDIA Corporation (NVDA) Q2 2023 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q2 2023 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q2 2023 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q2 2023 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q2 2023 Quarterly Presentation

Q1 FY2023

- NVIDIA Corporation (NVDA) Q1 2023 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q1 2023 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q1 2023 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q1 2023 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q1 2023 Quarterly Presentation

Q4 FY2022

- NVIDIA Corporation (NVDA) Q4 2022 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q4 2022 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q4 2022 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q4 2022 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q4 2022 Quarterly Presentation

Q3 FY2022

- NVIDIA Corporation (NVDA) Q3 2022 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q3 2022 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q3 2022 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q3 2022 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q3 2022 Quarterly Presentation

Q2 FY2022

- NVIDIA Corporation (NVDA) Q2 2022 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q2 2022 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q2 2022 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q2 2022 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q2 2022 Quarterly Presentation

Q1 FY2022

- NVIDIA Corporation (NVDA) Q1 2022 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q1 2022 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q1 2022 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q1 2022 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q1 2022 Quarterly Presentation

Q4 FY2021

- NVIDIA Corporation (NVDA) Q4 2021 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q4 2021 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q4 2021 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q4 2021 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q4 2021 Quarterly Presentation

Q3 FY2021

- NVIDIA Corporation (NVDA) Q3 2021 Earnings Call Transcript

- NVIDIA Corporation (NVDA) Q3 2021 Earnings Press Releases

- NVIDIA Corporation (NVDA) Q3 2021 Earnings CFO Commentary

- NVIDIA Corporation (NVDA) Q3 2021 Quarterly Revenue Trend

- NVIDIA Corporation (NVDA) Q3 2021 Quarterly Presentation